Introduction to Quantum Error Correction

Introduction

Over the past weeks, we have covered the two ends of the quantum computing stack.

We started with the physical platforms: trapped neutral atoms, trapped ions, superconducting circuits, photons, and quantum dots.

We then moved to quantum algorithms.

We studied Shor's factoring algorithm and explored Grover's search algorithm, with its quadratic speedup through amplitude amplification.

We also confronted the resource-estimation reality check: factoring a 2048-bit RSA key requires millions of physical qubits and hours to days of coherent operation, far beyond what any current device can provide.

The gap between the error rates of today's physical qubits and the requirements of fault-tolerant algorithms is the central challenge of the field.

This is not a nice-to-have problem: it is the bottleneck standing between quantum computing theory and reality.

Today's lecture addresses this gap with Quantum Error Correction (QEC).

We will see how to protect fragile quantum information from noise by encoding it redundantly across many physical qubits, creating robust logical qubits.

We begin with classical error correction to build intuition, then confront the unique challenges of the quantum world (e.g., the No-Cloning Theorem and measurement back-action). From there, we progressively build up from simple repetition codes, the most classical of QEC codes, to the Shor code, the stabilizer formalism, and finally the surface code.

We will also discuss fault-tolerant circuit design, logical gates, and the threshold theorem that guarantees error correction works even with imperfect components.

These notes were written for the Experimental Quantum Computing and Error Correction course of M2 Master QLMN, see the course's website for more information.

You are not expected to solve any exercise from today's lecture.

Instead, read Acharya et al., "Quantum error correction below the surface code threshold," Nature (2025) (https://www.nature.com/articles/s41586-024-08449-y) [1].

We'll discuss it during next lecture.

Quiz: Exponential Suppression of Errors in QEC

Let's begin by discussing the paper from the Google Quantum AI team titled "Exponential suppression of bit or phase errors with cyclic error correction," Nature 595, 383-387 (2021), DOI: 10.1038/s41586-021-03588-y [2].

Here are 5 questions to check our understanding.

Which quantum error correction code structure was primarily implemented to demonstrate the exponential suppression of errors in this experiment?

A. A 2D surface code measuring both $X$ and $Z$ stabilizers simultaneously.

B. A 1D repetition code embedded in a 2D grid, configured separately for either bit-flip or phase-flip protection.

C. A Shor code encoding 1 logical qubit into 9 physical qubits.

D. A bosonic cat code using superconducting cavities.

Correct Answer: B

The experiment implemented a 1D repetition code embedded in the 2D Sycamore chip grid.

The code was configured separately for bit-flip protection (using $ZZ$ parity checks) and for phase-flip protection (using $XX$ parity checks).

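According to the experimental results, how does the logical error probability per round ($\epsilon_L$) scale with the code distance $d$?

A. Linearly: $\epsilon_L \propto 1/d$.

B. Quadratically: $\epsilon_L \propto 1/d^2$.

C. Exponentially: $\epsilon_L \propto e^{-\alpha d}$ for some $\alpha > 0$.

D. The logical error rate remained constant as $d$ increased.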

Correct Answer: C

The main result of the paper is the exponential suppression of logical errors with code distance.

Which of the following correctly describe the scale of the repetition code experiments performed? (Select all that apply)

A. The code distances ranged from $d = 3$ to $d = 11$.

B. The largest code used 21 superconducting qubits (11 data qubits, 10 measure qubits).

C. The experiment was limited to

D. The logical error suppression was shown to be stable over 50 rounds of error correction.

Correct Answers: A, B, D

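The experiment demonstrated codes from $d = 3$ (5 qubits) to $d = 11$ (21 qubits: 11 data and 10 measure qubits), with error suppression that remained stable over 50 rounds.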

The Sycamore chip had sufficient qubits to go well beyond

How did the experiment address the distinction between bit-flip and phase-flip errors? (Select all that apply)

A. A single surface code patch corrected both error types concurrently.

B. The "Phase-Flip Code" used $XX$ parity checks to detect $Z$ errors.

C. The "Bit-Flip Code" used $ZZ$ parity checks to detect $X$ errors.

D. Phase-flip errors were found to be negligible compared to bit-flip errors.

Correct Answers: B, C

The experiment ran two separate configurations.

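The bit-flip code measured $ZZ$ parities to detect $X$ errors, while the phase-flip code measured $XX$ parities to detect $Z$ errors.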

Each configuration protects against only one error type at a time.

What conclusion did the authors reach regarding the physical error mechanisms in the device?

A. The errors were dominated by non-local cosmic ray events that destroyed the code immediately.

B. The device performance was well-described by a simple uncorrelated depolarizing error model.

C.

D. Correlated errors were significant enough to prevent any error suppression as distance increased.

Correct Answer: B

The paper found that the device noise was well-modeled by simple uncorrelated Pauli errors, which is why the exponential suppression with distance was observed.

Correlated errors were present but subdominant.

Classical Error Correction

Before diving into the quantum realm, let's build intuition from the classical world.

To understand why we need quantum codes, we first need to appreciate how we protect classical information from noise.

This section draws inspiration from the foundational concepts of information theory.

The Noisy Channel

Imagine we want to send a single bit of information, either a $0$ or a $1$.

In a perfect world, what you send is what you get.

But physical systems are rarely perfect.

We face two primary challenges:

- Transmission Noise: Sending a bit via a noisy line where there is a non-zero probability that the bit flips during transit.

- Storage Decay: Storing a bit in memory where, over time, thermal fluctuations or cosmic rays might cause the bit to flip with a probability $p$.

The question is: How can we increase the chance of successful transmission or storage despite this inherent noise?

Redundancy and the Majority Vote

The most intuitive solution is redundancy.

If a single bit is fragile, why not use many?

This is the core idea behind the Classical Repetition Code.

Instead of storing a single bit, we store multiple copies of that same bit and periodically perform a "sanity check" using a Majority Vote algorithm.

For this introductory derivation, we assume that the process of "reading" the bits to take a majority vote is itself perfect and instantaneous.

We are only concerned with the errors occurring in the bits themselves during the "waiting" or "traveling" periods.

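If we use 3 bits to represent a single logical $0$, we store $000$.

If a single bit flips to $1$ (e.g., $000 \to 010$), the majority vote still returns $0$.

However, if two bits flip (e.g., $000 \to 110$), the majority vote fails, and we incorrectly conclude the logical bit was a $1$.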

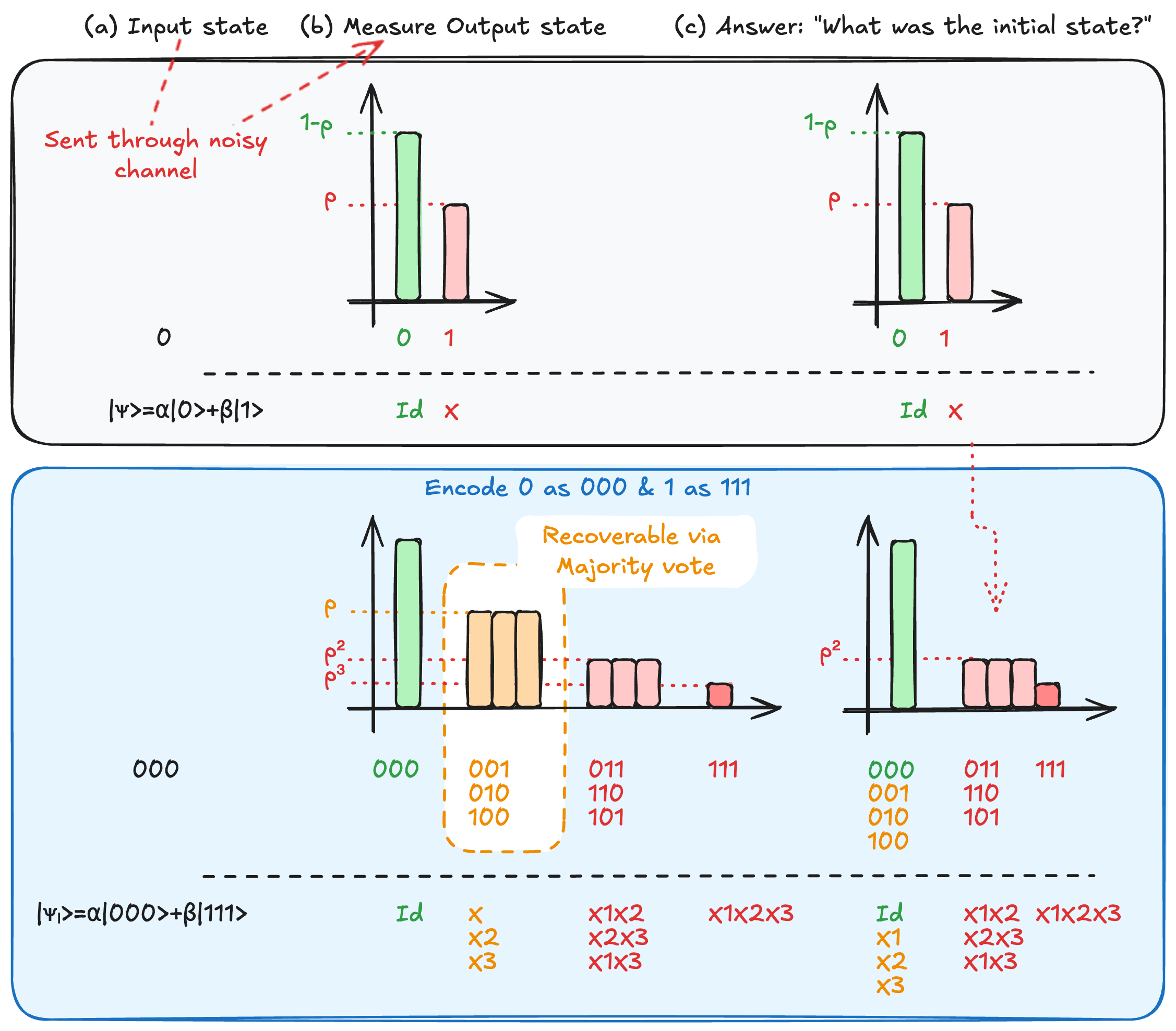

This error suppression mechanism is illustrated in the figure above.

Lexicon and Definitions

To speak the language of error correction, we must define two critical terms:

- Logical States: These are the "effective" states we care about. Recall that for a physical qubit, too, we emphasised that qubit states do not exist on their own: they are encoded in a physical Hilbert space. For a 3-bit repetition code, we thus define the logical states $0_L = 000$ and $1_L = 111$.

- Code Distance ($d$): This is the minimal number of physical bit flips required to transform one valid codeword into another valid codeword.
  - For a 3-bit code ($d = 3$), you need 3 flips to go from $0_L = 000$ to $1_L = 111$.
  - For a 5-bit code ($d = 5$), you need 5 flips.

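A code with distance $d$ can correct up to $t = \lfloor (d-1)/2 \rfloor$ bit flips.

Mathematically, the code fails only if at least $t + 1$ bits flip, since only then does the majority of bits hold the wrong value.

So a distance-$d$ code (with $d$ odd) fails only when $(d+1)/2$ or more of its bits flip.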

Furthermore, there is a distinction between detecting and correcting errors.

A code can detect up to $d - 1$ errors, but it can only correct up to $\lfloor (d-1)/2 \rfloor$ of them.

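If the probability of a single bit flipping is $p$ (independently for each bit), the probability that the majority vote fails is the binomial tail
$$P_{\text{fail}}(d) = \sum_{k=(d+1)/2}^{d} \binom{d}{k} p^k (1-p)^{d-k}.$$

For a $d = 3$ code, $P_{\text{fail}} = 3p^2(1-p) + p^3 = 3p^2 - 2p^3 \approx 3p^2$.

As long as $p < 1/2$, increasing $d$ suppresses $P_{\text{fail}}$ exponentially, roughly as $p^{(d+1)/2}$.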

This exponential suppression with distance is the whole point of error correction.
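The binomial tail behind this suppression is easy to evaluate numerically. The sketch below is a minimal Python illustration, assuming independent flips with probability `p`, perfect reading and voting (the idealisation made above), and an odd number of copies `d`; the function name `majority_vote_failure` is ours, not a standard API.

```python
from math import comb


def majority_vote_failure(d: int, p: float) -> float:
    """Probability that a majority vote over d independent copies fails.

    Assumes each bit flips independently with probability p and that
    reading and voting are perfect. d must be odd so a strict majority exists.
    """
    if d % 2 == 0:
        raise ValueError("use an odd number of copies d")
    t = (d + 1) // 2  # smallest number of flips that fools the vote
    return sum(comb(d, k) * p**k * (1 - p) ** (d - k) for k in range(t, d + 1))


if __name__ == "__main__":
    p = 0.01
    for d in (1, 3, 5, 7):
        print(f"d={d}: P_fail = {majority_vote_failure(d, p):.3e}")
```

For small `p`, each increase of `d` by 2 lowers the printed value by roughly another factor of `p`, times a modest combinatorial prefactor.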

Consider a classical 5-bit repetition code ($d = 5$) in which each bit flips independently with probability $p$.

- Calculate the probability that the majority vote fails (i.e., 3 or more out of 5 bits flip).

- Compare this to the uncoded error probability $p$.
- What is the ratio of encoded to unencoded error probability? What does this tell you about the value of redundancy?

- The majority vote fails when 3, 4, or 5 bits flip.

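Using the binomial distribution:
$$P_{\text{fail}} = \binom{5}{3}p^3(1-p)^2 + \binom{5}{4}p^4(1-p) + \binom{5}{5}p^5 = 10p^3(1-p)^2 + 5p^4(1-p) + p^5 \approx 10p^3.$$

- The unencoded error probability is simply $p$.
- The ratio is approximately $P_{\text{fail}}/p \approx 10p^2$.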

The 5-bit code suppresses the error rate by four orders of magnitude.

Redundancy pays off when $p$ is small.

The Cost of Redundancy

While redundancy grants us protection, it is not free.

We must consider the resource overhead.

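If we want to encode $k = 4$ bits of data with the 3-bit repetition code, the resource count is:

| Metric | Calculation | Value |
|---|---|---|
| Data ($k$) | The original information | 4 bits |
| Encoded ($n$) | Total physical bits ($n = 3k$) | 12 bits |
| Redundancy | $n - k$ | 8 bits |
| Rate ($R$) | $k/n$ | $1/3$ |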

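The physical scaling is linear: if you have $k$ data bits, a distance-$d$ repetition code needs $n = dk$ physical bits, so the rate is $R = 1/d$.

The efficiency of the encoding becomes a challenge.

We have seen that we can achieve high protection by increasing $d$, but this drives the rate $R = 1/d$ toward zero.

The question becomes: how can we increase the rate ($R = k/n$) while keeping a useful level of protection?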

This question leads us directly into the world of Linear Codes and, eventually, Quantum Error Correcting Codes.

Hamming Code: Parity and the Price of Information

In the 1940s at Bell Labs, Richard Hamming was frustrated by the fragility of electromechanical computers, which frequently crashed due to bit flips.

Here is an excerpt of the Hamming code Wikipedia page:

Hamming worked on weekends, and grew increasingly frustrated with having to restart his programs from scratch due to detected errors.

In a taped interview, Hamming said, "And so I said, 'Damn it, if the machine can detect an error, why can't it locate the position of the error and correct it?'".

This led to the development of the Hamming Code, a method of checking the parity of data bits that increases the reliability of information transfer at a much higher rate than simple repetition.

The idea behind Hamming codes is to add a specific number of redundant bits, which we often call ancilla bits (specifically in the context of our QEC circuits).

These ancilla bits allow us to detect that an error has occurred and to uniquely identify where (on which data bit) it happened.

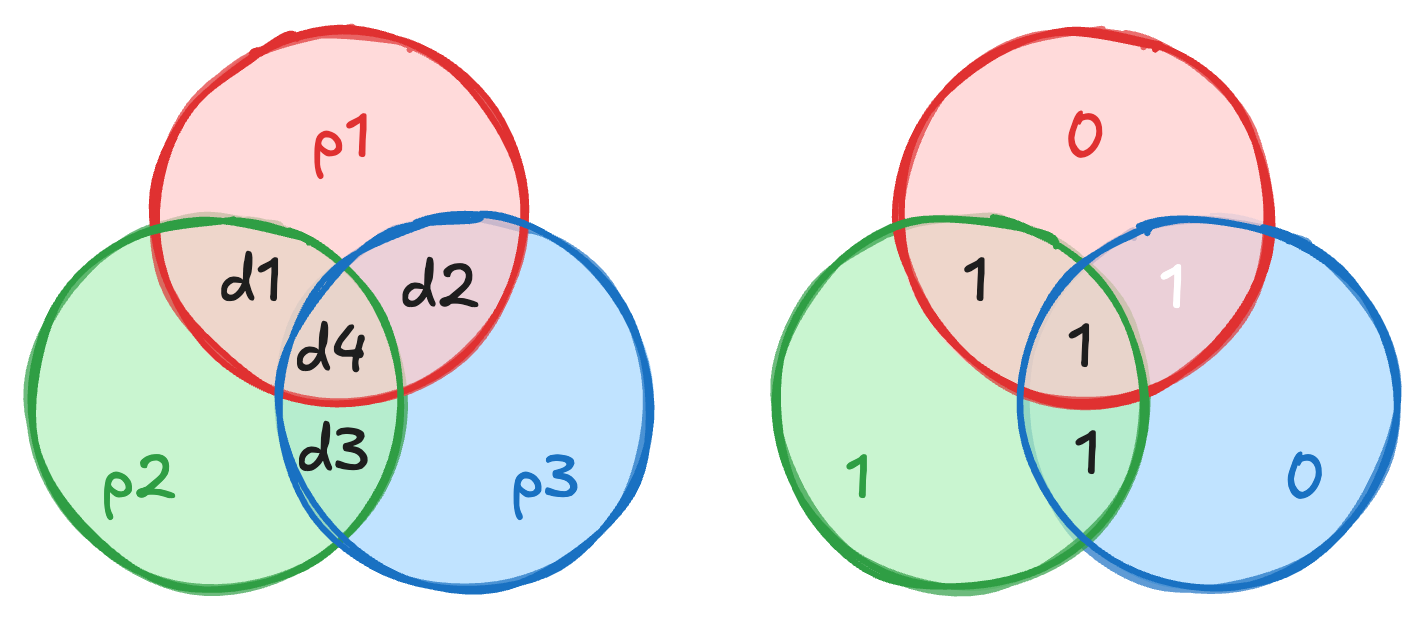

Geometric Intuition: The Hamming(7,4) Code

Consider $k = 4$ data bits $d_1, d_2, d_3, d_4$.

To protect these, we add three parity bits: $p_1$, $p_2$, and $p_3$.

A good way to visualize this is through a Venn diagram where the four data bits sit in the intersections of three overlapping circles, see the figure above.

Each parity bit is responsible for maintaining an even parity within its respective circle:

$p_1$ is the parity of $d_1$, $d_2$, and $d_4$. $p_2$ is the parity of $d_1$, $d_3$, and $d_4$. $p_3$ is the parity of $d_2$, $d_3$, and $d_4$.

We can also represent this relationship using a parity-check matrix where each row shows which data bits are involved in a given parity bit:

| Parity | $d_1$ | $d_2$ | $d_3$ | $d_4$ |
|---|---|---|---|---|
| $p_1$ | x | x | | x |
| $p_2$ | x | | x | x |
| $p_3$ | | x | x | x |

Mathematically, we define the parity such that the sum (modulo 2) of the bits in a circle is zero.

For example, $d_1 \oplus d_2 \oplus d_4 \oplus p_1 = 0$.

Error Identification and the Syndrome

What makes this data-plus-parity system work is unique identification.

If any given data bit flips, it changes the parity of exactly the circles it belongs to. By reading the set of parity violations, also called the syndrome, we obtain an "error location address" that tells us where the error occurred.

Suppose our data is

We would observe that the new values of the parity checks associated with

Looking at our map,

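From a linear algebra perspective, encoding is handled by the code generator matrix $G$, and syndrome extraction by the parity-check matrix $H$: the syndrome of a received word $r$ is $Hr \pmod 2$.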
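To make the linear-algebra view concrete, here is a small NumPy sketch of Hamming(7,4) encoding, syndrome computation and single-bit correction. It assumes the parity convention $p_1 = d_1 \oplus d_2 \oplus d_4$, $p_2 = d_1 \oplus d_3 \oplus d_4$, $p_3 = d_2 \oplus d_3 \oplus d_4$ and the bit layout `[d1, d2, d3, d4, p1, p2, p3]`; other textbooks order the bits differently, and the helper names are illustrative.

```python
import numpy as np

# Rows: which data bits each parity bit covers (assumed convention).
A = np.array([[1, 1, 0, 1],   # p1 = d1 ^ d2 ^ d4
              [1, 0, 1, 1],   # p2 = d1 ^ d3 ^ d4
              [0, 1, 1, 1]],  # p3 = d2 ^ d3 ^ d4
             dtype=int)

# Generator G = [I_4 | A^T] and parity-check matrix H = [A | I_3].
G = np.hstack([np.eye(4, dtype=int), A.T])
H = np.hstack([A, np.eye(3, dtype=int)])


def encode(data):
    """Map 4 data bits to a 7-bit codeword (arithmetic mod 2)."""
    return (np.asarray(data) @ G) % 2


def syndrome(word):
    """3-bit syndrome H @ word (mod 2); all zeros means no error is seen."""
    return (H @ np.asarray(word)) % 2


def correct(word):
    """Flip the single bit whose column of H matches the syndrome."""
    word = np.asarray(word).copy()
    s = syndrome(word)
    if s.any():
        # Every column of H is distinct and non-zero, so the match is unique.
        pos = int(np.where((H.T == s).all(axis=1))[0][0])
        word[pos] ^= 1
    return word


codeword = encode([1, 0, 1, 1])
corrupted = codeword.copy()
corrupted[2] ^= 1                                   # flip d3
print(syndrome(corrupted))                          # [0 1 1]: p1 OK, p2 and p3 fail
print(np.array_equal(correct(corrupted), codeword)) # True
```

Because every column of `H` is distinct and non-zero, a single flipped bit always produces a unique syndrome, which is exactly the "error location address" described above.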

Looking back at our redundancy table, while a simple repetition code (1 data bit with 2 "copy" ancilla bits) provides high redundancy but low efficiency, the Hamming(7,4) code strikes a balance.

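With $r$ parity bits, a Hamming code protects $k = 2^r - r - 1$ data bits in a block of $n = 2^r - 1$ bits; for $r = 3$ this is exactly the Hamming(7,4) code.

This scaling is efficient: the redundancy grows only as $r = \log_2(n + 1)$, i.e., logarithmically with the block size $n$.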

Consider a Hamming(7,4) code with data bits

- Compute the three parity bits $p_1$, $p_2$, $p_3$.
- Suppose $d_3$ is flipped. What syndrome do you observe (which parity checks fail)?
- Verify that the syndrome uniquely identifies $d_3$ as the corrupted bit.

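- With the given data bits, each parity bit is the XOR of the data bits in its circle, as listed in the parity-check table.
- If $d_3$ flips ($d_3 \to d_3 \oplus 1$), recompute the parities: $p_1$ does not involve $d_3$ (unchanged, passes); $p_2$ involves $d_3$ (fails); $p_3$ involves $d_3$ (fails). Syndrome: $p_1$ OK, $p_2$ FAIL, $p_3$ FAIL.
- Looking at the parity-check matrix, $d_3$ participates in $p_2$ and $p_3$ but not in $p_1$. This matches the observed syndrome, uniquely identifying $d_3$.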

From Classical to Quantum: The Measurement Back-Action

As we move from the classical regime to the quantum one, the motivation for encoding via parity bits changes fundamentally.

In the classical world, adding parity bits is an efficiency-driven choice: we do it to avoid the cost of re-calculating or re-storing data.

In the quantum world, however, storing parity information in ancilla qubits becomes a physical necessity.

This is due to two things: the No-Cloning Theorem, which says that we cannot simply copy quantum states (in other words, there is no unitary operation $U$ such that $U|\psi\rangle|0\rangle = |\psi\rangle|\psi\rangle$ for every $|\psi\rangle$), and measurement back-action, which says that measuring a quantum system generally disturbs its state.

This last one implies that if we were to measure our data qubits encoding an arbitrary quantum state directly to check for errors, we would collapse their superposition, destroying the very quantum information we are trying to protect.

Instead, we must map the parity information onto ancilla qubits and measure only those, allowing us to extract the syndrome without "touching" the data itself.

This insight is at the heart of the stabilizer formalism, building on the foundational work of [3], which we will discuss after first looking at simpler examples.

Compare the code rate $R = k/n$ for:

- A 3-bit repetition code encoding 1 logical bit.

- A Hamming(7,4) code encoding 4 logical bits.

- A Hamming(15,11) code encoding 11 logical bits.

- What general trend do you observe? Why is this relevant for quantum codes?

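- 3-bit repetition code: $R = 1/3 \approx 0.33$.
- Hamming(7,4): $R = 4/7 \approx 0.57$.
- Hamming(15,11): $R = 11/15 \approx 0.73$.
- The rate increases as we encode more data bits with the Hamming family. The number of parity bits grows only as $\log_2(n + 1)$, so the overhead becomes a smaller fraction of the block.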

For quantum codes, this trade-off is even more critical because each physical qubit is expensive (in terms of hardware and control), making high-rate codes desirable.
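The trend can be checked with exact fractions. This sketch assumes the standard Hamming family with $r$ parity bits, $n = 2^r - 1$ and $k = n - r$; note that $r = 2$ gives back the 3-bit repetition code. The function names are illustrative.

```python
from fractions import Fraction


def hamming_parameters(r: int):
    """(n, k, R) for the Hamming code with r parity bits: n = 2^r - 1, k = n - r."""
    n = 2**r - 1
    k = n - r
    return n, k, Fraction(k, n)


def repetition_rate(d: int) -> Fraction:
    """A distance-d repetition code stores 1 bit in d physical bits."""
    return Fraction(1, d)


print("repetition d=3:", repetition_rate(3))
for r in range(3, 7):
    n, k, rate = hamming_parameters(r)
    print(f"Hamming({n},{k}): R = {rate} ~ {float(rate):.2f}")
```

Keep in mind that every Hamming code has distance 3, so the growing rate is bought at fixed protection (one correctable error per block), unlike the repetition code, where increasing $d$ buys more protection at a lower rate.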

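To see why copying is impossible, assume a unitary $U$ clones arbitrary states: $U|\psi\rangle|0\rangle = |\psi\rangle|\psi\rangle$ for all $|\psi\rangle$. In particular, $U|0\rangle|0\rangle = |00\rangle$ and $U|1\rangle|0\rangle = |11\rangle$.

Apply $U$ to $|+\rangle|0\rangle = \frac{1}{\sqrt{2}}(|0\rangle + |1\rangle)|0\rangle$.

By linearity of $U$: $U|+\rangle|0\rangle = \frac{1}{\sqrt{2}}\left(U|0\rangle|0\rangle + U|1\rangle|0\rangle\right) = \frac{1}{\sqrt{2}}(|00\rangle + |11\rangle)$.

This is a Bell state (maximally entangled), not the product state $|+\rangle|+\rangle = \frac{1}{2}(|00\rangle + |01\rangle + |10\rangle + |11\rangle)$ that a cloner should output.

A machine that correctly copies $|0\rangle$ and $|1\rangle$ therefore necessarily fails on $|+\rangle$.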

No single unitary can clone all states, so a universal quantum cloning machine is impossible.

This is why quantum error correction must use entanglement rather than copying.
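A quick numerical check of the argument, using the CNOT gate as one candidate "copier" (our choice of example; the linearity argument above covers every unitary): CNOT copies $|0\rangle$ and $|1\rangle$ onto a blank qubit, but on $|+\rangle$ it produces the Bell state.

```python
import numpy as np

ket0 = np.array([1, 0], dtype=complex)
ket1 = np.array([0, 1], dtype=complex)
plus = (ket0 + ket1) / np.sqrt(2)

# CNOT with qubit 1 as control and qubit 2 (the blank copy) as target.
# Basis order: |00>, |01>, |10>, |11>.
CNOT = np.array([[1, 0, 0, 0],
                 [0, 1, 0, 0],
                 [0, 0, 0, 1],
                 [0, 0, 1, 0]], dtype=complex)


def try_clone(psi):
    """Apply CNOT to |psi>|0> and return the two-qubit output state."""
    return CNOT @ np.kron(psi, ket0)


# Computational basis states are copied perfectly...
assert np.allclose(try_clone(ket0), np.kron(ket0, ket0))
assert np.allclose(try_clone(ket1), np.kron(ket1, ket1))

# ...but |+> is not: the output is the Bell state, not |+>|+>.
out = try_clone(plus)
bell = (np.kron(ket0, ket0) + np.kron(ket1, ket1)) / np.sqrt(2)
fidelity = abs(np.vdot(np.kron(plus, plus), out)) ** 2
print(np.allclose(out, bell), round(fidelity, 3))   # True 0.5
```

The fidelity of 0.5 with the desired $|+\rangle|+\rangle$ quantifies how badly the attempted copy fails.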

Quantum Repetition Code

To bypass the measurement problem, we use a Repetition Code that follows the key concepts of the classical one but tailored for quantum states.

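While this code only protects against one type of error at a time, specifically bit-flips ($X$ errors), it already shows the key trick: we extract information about errors without measuring the data qubits individually.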

Instead of checking the value of qubit 1 and then qubit 2, we ask a relational question, just like in Hamming codes: "Are these two qubits the same or different?"

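This question has a binary answer: even parity (eigenvalue $+1$ of $Z_1Z_2$, the qubits agree) or odd parity (eigenvalue $-1$, the qubits differ).

This tells us if an error occurred without revealing whether the qubits are in state $|0\rangle$ or $|1\rangle$.

Said in another way, consider a general 2-qubit state with even parity, $|\psi\rangle = \alpha|00\rangle + \beta|11\rangle$.

Measuring the qubits individually would collapse it onto $|00\rangle$ or $|11\rangle$ and destroy the superposition, whereas measuring the parity $Z_1Z_2$ returns $+1$ with certainty and leaves $|\psi\rangle$ untouched.

For instance, if we found odd parity instead, we would learn only that the qubits disagree (the state lies in the span of $|01\rangle$ and $|10\rangle$), still without learning $\alpha$ or $\beta$.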

The 3-Qubit Bit-Flip Code

To build our intuition, let's look at the simplest possible instance: protecting against a single bit-flip error ($X$ error).

Again, we borrow the classical idea of redundancy but adapt it to the quantum regime.

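We define our logical basis states by the mapping:
$$|0\rangle \mapsto |0_L\rangle = |000\rangle, \qquad |1\rangle \mapsto |1_L\rangle = |111\rangle.$$

An arbitrary physical state $\alpha|0\rangle + \beta|1\rangle$ is therefore encoded as $|\psi_L\rangle = \alpha|000\rangle + \beta|111\rangle$. This is an entangled state, not three copies $(\alpha|0\rangle + \beta|1\rangle)^{\otimes 3}$, so the No-Cloning Theorem is not violated.

If a bit flip occurs on the first qubit, the state becomes $X_1|\psi_L\rangle = \alpha|100\rangle + \beta|011\rangle$.

Our parity measurements would reveal that the first and second qubits are different, but the second and third are the same.

Just like in the classical setting, this "syndrome" tells us exactly which qubit flipped, allowing us to apply a corrective $X_1$ gate.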
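The encode, error, syndrome, correct loop can be simulated with plain state vectors. In this minimal NumPy sketch, qubit 1 of the text is index 0 in the code (the most significant bit of the basis index), and the syndrome is read from stabilizer expectation values. That shortcut is only valid because, after a single $X$ error, the state is an exact eigenstate of $Z_1Z_2$ and $Z_2Z_3$; real hardware instead obtains the syndrome from a projective measurement via ancillas. Helper names are illustrative.

```python
import numpy as np

I = np.eye(2)
X = np.array([[0, 1], [1, 0]])
Z = np.array([[1, 0], [0, -1]])


def op(single, qubit, n=3):
    """Embed a single-qubit operator on `qubit` (0-indexed) into n qubits."""
    out = np.array([[1.0]])
    for q in range(n):
        out = np.kron(out, single if q == qubit else I)
    return out


def encode(alpha, beta):
    """alpha|0> + beta|1>  ->  alpha|000> + beta|111>."""
    psi = np.zeros(8, dtype=complex)
    psi[0b000], psi[0b111] = alpha, beta
    return psi


S1 = op(Z, 0) @ op(Z, 1)   # Z1 Z2
S2 = op(Z, 1) @ op(Z, 2)   # Z2 Z3

# Syndrome (eigenvalue of Z1Z2, eigenvalue of Z2Z3) -> qubit to flip.
LOOKUP = {(1, 1): None, (-1, 1): 0, (-1, -1): 1, (1, -1): 2}


def measure_syndrome(psi):
    """Stabilizer expectation values; exactly +/-1 after at most one X error."""
    return tuple(int(round(np.vdot(psi, S @ psi).real)) for S in (S1, S2))


def correct(psi):
    target = LOOKUP[measure_syndrome(psi)]
    return psi if target is None else op(X, target) @ psi


psi_L = encode(0.6, 0.8)
corrupted = op(X, 0) @ psi_L                # bit flip on the first qubit
print(measure_syndrome(corrupted))          # (-1, 1): first pair differs
print(np.allclose(correct(corrupted), psi_L))  # True
```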

In the following, we will see what we mean by parity measurements in this context and how to implement it in practice.

Before that, we quickly mention how to switch from protecting against bit flips ($X$ errors) to protecting against phase flips ($Z$ errors).

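Consider the logical state $|\psi_L\rangle = \alpha|000\rangle + \beta|111\rangle$.

- Verify that $Z_1Z_2|\psi_L\rangle = |\psi_L\rangle$ and $Z_2Z_3|\psi_L\rangle = |\psi_L\rangle$ (i.e., this state is in the $+1$ eigenspace of both $Z_1Z_2$ and $Z_2Z_3$).
- An $X$ error occurs on qubit 2. Compute $Z_1Z_2X_2|\psi_L\rangle$ and $Z_2Z_3X_2|\psi_L\rangle$.
- What is the syndrome? How do you correct the error?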

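- $Z_1Z_2|000\rangle = |000\rangle$ and $Z_1Z_2|111\rangle = (-1)(-1)|111\rangle = |111\rangle$. Therefore $Z_1Z_2|\psi_L\rangle = |\psi_L\rangle$, and the same computation gives $Z_2Z_3|\psi_L\rangle = |\psi_L\rangle$.
- $X_2|\psi_L\rangle = \alpha|010\rangle + \beta|101\rangle$. Then $Z_1Z_2(\alpha|010\rangle + \beta|101\rangle) = -(\alpha|010\rangle + \beta|101\rangle)$, so $Z_1Z_2$ gives eigenvalue $-1$. Likewise, $Z_2Z_3(\alpha|010\rangle + \beta|101\rangle) = -(\alpha|010\rangle + \beta|101\rangle)$, so $Z_2Z_3$ gives eigenvalue $-1$.
- Syndrome: $(-1, -1)$. From the syndrome table, this uniquely identifies an error on qubit 2. We apply $X_2$ to correct.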

The Phase-Flip Code

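With quantum mechanics comes a new type of error: the phase-flip ($Z$ error), which leaves $|0\rangle$ unchanged but maps $|1\rangle \to -|1\rangle$, and has no classical analogue.

If we apply the same bit-flip code, it fails completely because a $Z$ error on any qubit commutes with the $Z_iZ_j$ checks: for example, $Z_1(\alpha|000\rangle + \beta|111\rangle) = \alpha|000\rangle - \beta|111\rangle$, which is another valid codeword, so the error goes unnoticed and corrupts the logical information.

To solve this, we rotate our basis.

By working in the dual basis $\{|+\rangle, |-\rangle\}$, with $|0_L\rangle = |{+}{+}{+}\rangle$ and $|1_L\rangle = |{-}{-}{-}\rangle$, a phase flip acts as a bit flip: $Z|\pm\rangle = |\mp\rangle$.

By measuring the stabilizers $X_1X_2$ and $X_2X_3$, we can then detect and locate any single phase flip.

This symmetry between bit flips and phase flips is captured by a single gate.

The Hadamard gate $H$ exchanges $X$ and $Z$: $HXH = Z$ and $HZH = X$.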

If you can correct bit-flips, you can correct phase-flips simply by rotating your perspective by 90 degrees on the Bloch sphere.

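- Show that a single $Z_1$ error on the state $|{+}{+}{+}\rangle$ is undetectable by the $Z_1Z_2$ check.
- Show that the same $Z_1$ error is detected by the stabilizer $X_1X_2$.

Hint: Use the fact that $X|\pm\rangle = \pm|\pm\rangle$ and $Z|\pm\rangle = |\mp\rangle$.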

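- $Z_1|+\rangle = |-\rangle$, and $Z_1$ acts trivially on qubits 2 and 3, so the corrupted state is $|{-}{+}{+}\rangle$. In the computational basis, $|+\rangle = (|0\rangle + |1\rangle)/\sqrt{2}$ and $|-\rangle = (|0\rangle - |1\rangle)/\sqrt{2}$. Computing $Z_1Z_2$ on these states: both $|{+}{+}{+}\rangle$ and $|{-}{+}{+}\rangle$ are equal-weight superpositions of computational basis states in which qubits 1 and 2 take all four values, so $Z_1Z_2$ returns $\pm 1$ with probability $1/2$ each in both cases. The $Z_1Z_2$ check measures computational-basis parity, which does not correlate with phase information. Formally, $[Z_1, Z_1Z_2] = 0$, so $Z_1$ commutes with $Z_1Z_2$ and cannot be detected.
- $X_1X_2|{+}{+}{+}\rangle = +|{+}{+}{+}\rangle$ (eigenvalue $+1$). So $X_1X_2\,Z_1|{+}{+}{+}\rangle = -Z_1X_1X_2|{+}{+}{+}\rangle = -Z_1|{+}{+}{+}\rangle$. The eigenvalue flipped to $-1$, detecting the error. This works because $X_1Z_1 = -Z_1X_1$ (they anti-commute).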

Consider the Bell state $|\Phi^+\rangle = \frac{1}{\sqrt{2}}(|00\rangle + |11\rangle)$.

- What happens if you measure $Z_1$ individually? What is the post-measurement state for each outcome?
- What happens if you measure $Z_1Z_2$ jointly? What is the post-measurement state?

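- Measuring $Z_1$:
  - Outcome $+1$ (prob. 1/2): the state collapses to $|00\rangle$.
  - Outcome $-1$ (prob. 1/2): the state collapses to $|11\rangle$.

  In both cases, the superposition is destroyed.
- Measuring $Z_1Z_2$: $Z_1Z_2|00\rangle = |00\rangle$ and $Z_1Z_2|11\rangle = |11\rangle$. Both terms have eigenvalue $+1$, so the measurement returns $+1$ with certainty. The post-measurement state is still $\frac{1}{\sqrt{2}}(|00\rangle + |11\rangle)$, completely unchanged.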

Repetition Code Circuit Implementation

One Parity Measurement

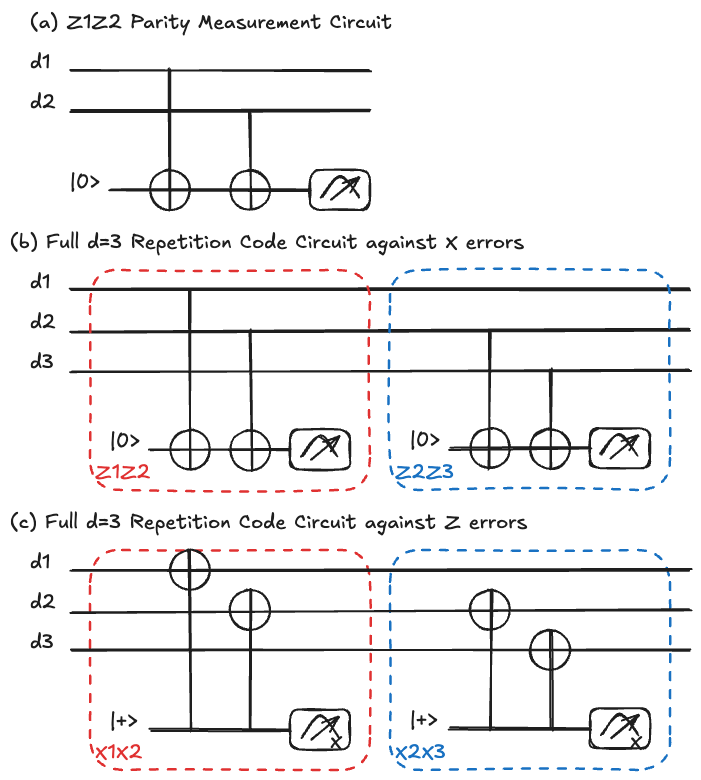

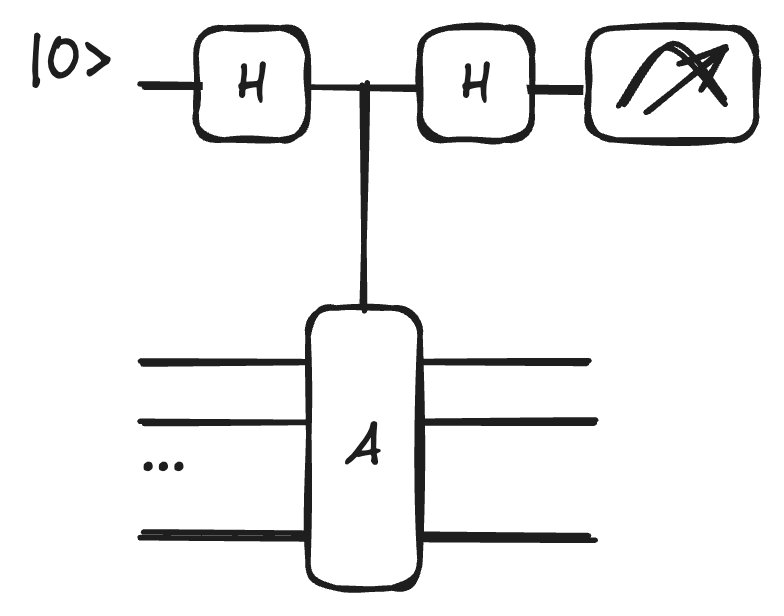

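How do we actually measure the parity $Z_1Z_2$ of two qubits without measuring each of them?

We want to check the joint parity of the two qubits in the computational basis.

Mathematically, this corresponds to the eigenvalue ($\pm 1$) of the joint operator $Z_1Z_2$.

To implement parity measurement, we introduce an ancilla qubit initialized in $|0\rangle$.

By applying CNOT gates controlled by each data qubit and targeting the ancilla, we accumulate the parity of the data qubits on the ancilla.

If the data qubits are in the same state (both 0 or both 1), the ancilla remains $|0\rangle$.

If they differ, the ancilla flips to $|1\rangle$.

This allows us to "monitor" the state without learning the specific values of the individual qubits.

The corresponding circuit is shown in the figures above.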
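The ancilla-based parity measurement can be traced numerically. This sketch assumes two data qubits followed by one ancilla (ordering: data 1, data 2, ancilla, with the ancilla as least significant bit) and builds CNOTs as permutation matrices; `cnot` and `parity_measurement` are hypothetical helper names.

```python
import numpy as np


def cnot(n, control, target):
    """Permutation matrix for CNOT on n qubits (qubit 0 = most significant bit)."""
    dim = 2**n
    U = np.zeros((dim, dim))
    for basis in range(dim):
        bits = [(basis >> (n - 1 - q)) & 1 for q in range(n)]
        if bits[control]:
            bits[target] ^= 1
        out = sum(b << (n - 1 - q) for q, b in enumerate(bits))
        U[out, basis] = 1
    return U


def parity_measurement(data_state):
    """Measure Z1Z2 of two data qubits via an ancilla (qubit 2) starting in |0>.

    Returns {outcome: (probability, normalised post-measurement 3-qubit state)}.
    """
    state = np.kron(data_state, np.array([1, 0], dtype=complex))
    state = cnot(3, 1, 2) @ (cnot(3, 0, 2) @ state)
    results = {}
    for outcome in (0, 1):
        # Keep the amplitudes whose ancilla bit equals `outcome`.
        mask = np.array([(i & 1) == outcome for i in range(8)])
        branch = np.where(mask, state, 0)
        prob = float(np.vdot(branch, branch).real)
        if prob > 1e-12:
            results[outcome] = (prob, branch / np.sqrt(prob))
    return results


alpha, beta = 0.6, 0.8
even = np.array([alpha, 0, 0, beta], dtype=complex)   # alpha|00> + beta|11>
odd = np.array([0, alpha, beta, 0], dtype=complex)    # alpha|01> + beta|10>
print({k: round(v[0], 3) for k, v in parity_measurement(even).items()})
print({k: round(v[0], 3) for k, v in parity_measurement(odd).items()})
```

For $\alpha|00\rangle + \beta|11\rangle$ the ancilla reads 0 with certainty and for $\alpha|01\rangle + \beta|10\rangle$ it reads 1, in both cases without revealing $\alpha$ or $\beta$; a state such as $|+\rangle|0\rangle$, which is not a parity eigenstate, gives each outcome with probability 1/2.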

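Consider the state $\alpha|00\rangle + \beta|11\rangle$ of two data qubits, together with an ancilla $a$ prepared in $|0\rangle_a$.

- Write the full 3-qubit state before any gates: $(\alpha|00\rangle + \beta|11\rangle)|0\rangle_a$.
- Apply CNOT$_{1a}$ (qubit 1 controls, ancilla is target). Write the resulting state.
- Apply CNOT$_{2a}$. Write the resulting state.
- What does measuring the ancilla tell you? Does it reveal $\alpha$ or $\beta$?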

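- CNOT$_{1a}$: qubit 1 controls the ancilla flip, giving $\alpha|00\rangle|0\rangle_a + \beta|11\rangle|1\rangle_a$. (The $|00\rangle$ term doesn't flip the ancilla; the first $1$ in $|11\rangle$ flips it to $|1\rangle_a$.)
- CNOT$_{2a}$: qubit 2 controls the ancilla flip, giving $\alpha|00\rangle|0\rangle_a + \beta|11\rangle|0\rangle_a$. (In $|11\rangle$, qubit 2 is $1$, so the ancilla flips back: $|1\rangle_a \to |0\rangle_a$.) Final state: $(\alpha|00\rangle + \beta|11\rangle)|0\rangle_a$.
- The ancilla is $|0\rangle_a$, meaning the parity is even (qubits agree). The measurement reveals only the parity, not $\alpha$ or $\beta$. The data state is undisturbed.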

Full Syndrome Extraction for the 3-Qubit Code

If we want to protect a logical qubit more robustly, we come back to a 3-qubit system capable of monitoring states of the form $\alpha|000\rangle + \beta|111\rangle$.

By using two ancilla qubits, we can perform the parity measurement described above twice, once for each pair of neighbouring qubits: $Z_1Z_2$ and $Z_2Z_3$.

The measurement results of these two ancillas provide us with an "error syndrome."

This syndrome tells us exactly which qubit (if any) suffered a bit-flip error ($X$ error):

| Error | $Z_1Z_2$ | $Z_2Z_3$ | Action |
|---|---|---|---|
| Nothing | $+1$ | $+1$ | None |
| Flip $q_1$ | $-1$ | $+1$ | Apply $X_1$ |
| Flip $q_2$ | $-1$ | $-1$ | Apply $X_2$ |
| Flip $q_3$ | $+1$ | $-1$ | Apply $X_3$ |

The full syndrome extraction circuit is shown in panel (b) of the circuit figure above.
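Since the bit-flip code only has to handle $X$ errors, its decoder can be checked with a purely classical model in which an error is a bit pattern. This sketch (hypothetical names, perfect syndrome measurements assumed) encodes the syndrome table as a lookup and applies it to every possible error pattern; ancilla outcome 0 corresponds to eigenvalue $+1$ and outcome 1 to $-1$.

```python
from itertools import product


# Only X errors: an error is a bit pattern e = (e1, e2, e3).
# Ancilla outcomes (0 = even, 1 = odd) for Z1Z2 and Z2Z3 are e1^e2 and e2^e3.
def syndrome(e):
    return (e[0] ^ e[1], e[1] ^ e[2])


# Syndrome table as a lookup: syndrome -> qubit to flip (0-indexed).
CORRECTION = {(0, 0): None, (1, 0): 0, (1, 1): 1, (0, 1): 2}


def residual_after_correction(e):
    """Apply the lookup-table correction and return the remaining error pattern."""
    e = list(e)
    q = CORRECTION[syndrome(e)]
    if q is not None:
        e[q] ^= 1
    return tuple(e)


for e in product((0, 1), repeat=3):
    r = residual_after_correction(e)
    verdict = "corrected" if r == (0, 0, 0) else "logical X"
    print(e, "syndrome", syndrome(e), "->", verdict)
```

Every weight-0 or weight-1 pattern is corrected, while every weight-2 or weight-3 pattern ends as $X_1X_2X_3$, a logical bit flip: this is the same distance-3 behaviour as in the classical case.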

Dealing with Phase Errors

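Again, we quickly mention that phase-flip errors ($Z$ errors) can be handled by the same construction after a change of basis.

To monitor states of the form $\alpha|{+}{+}{+}\rangle + \beta|{-}{-}{-}\rangle$, we measure $X_1X_2$ and $X_2X_3$ instead of $Z_1Z_2$ and $Z_2Z_3$.

By applying a basis change (Hadamard gates, since $HZH = X$), we replace our $Z$-parity checks with $X$-parity checks.

Consequently, the gates are swapped: each CNOT now has the ancilla (prepared in $|+\rangle$ and measured in the $X$ basis) as control and a data qubit as target.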

Moving Beyond Idealized Assumptions

Here we only gave examples of robustness to two simple error channels: Pauli $X$ (bit-flip) and Pauli $Z$ (phase-flip) errors.

However, in previous lessons, we mentioned that we do not live in a "Pauli-only" world: experimental reality is much messier.

In a real device, we face a cocktail of decoherence:

- Correlated Pauli errors: $X$ and $Z$ happening simultaneously on the same qubit (effectively a $Y$ error, since $Y = iXZ$).
- Leakage: The qubit leaves the computational subspace ($\{|0\rangle, |1\rangle\}$) and enters a higher state such as $|2\rangle$.
- Atom Loss: In neutral atom or trapped ion setups, the physical carrier of the information might literally disappear from the trap.

QEC should in theory take into account all these different noise channels to model as precisely as possible what is happening in our hardware.

We are only going to focus on Pauli errors in this lecture, but adding more complex noise channels is the end goal of QEC and an active field of research.

To achieve robust error correction, having physical redundancy is not enough.

We will rely on two pillars of Fault Tolerance: Spatial Redundancy and also Temporal Redundancy.

- Spatial Redundancy involves increasing the number of physical qubits, as we just saw for the repetition code. As we increase the code distance $d$, we increase the number of physical errors required to create a logical one.
- Temporal Redundancy involves repeating the syndrome measurements roughly $d$ times. This makes the system robust not only against data errors but also against measurement (ancilla) noise, ensuring that a single "bad read" doesn't lead to a false correction.

Imperfect Parity Measurements

Indeed, in reality, our measurement hardware is not perfect: it can corrupt the ancilla qubits as well as the data qubits.

We must distinguish between a transient measurement error (where the ancilla gives the wrong result) and a persistent data error (where the qubit actually flipped).

If we measure once and see a flip, we don't know if the data is corrupted or if the measurement device just "lied" to us.

The solution is to repeat the measurement.

By running the parity measurement circuits shown above multiple times, we can use a majority vote on the syndromes (or more sophisticated decoding) to decide whether an error has truly occurred.

By looking at the temporal pattern of the syndromes, we can isolate exactly where the fault occurred.

This idea is at the heart of Fault-Tolerant Quantum Computing [5].

- Absence of Error: Each measurement consistently reports $0$ (even parity).
- Data Error: A flip at time $t$ will cause all parity measurements after $t$ to report a flip.
- Measurement Error: This can be modeled as an $X$ gate applied to the ancilla right before the measurement. It results in a single flipped result, while subsequent measurements return to "normal."

An ancilla measuring $Z_1Z_2$ over five rounds produces the sequence $0, 0, 1, 1, 1$.

- Is this pattern consistent with a data error? If so, when did it occur?
- Another ancilla produces the sequence $0, 0, 1, 0, 0$. Is this consistent with a measurement error? When?
- Why does repeating the measurement help distinguish between these two cases?

- Yes. A data error between rounds 2 and 3 would flip the parity permanently. All subsequent measurements (rounds 3, 4, 5) report $1$, consistent with a persistent data error occurring between rounds 2 and 3.
- Yes. A measurement error in round 3 would produce a single incorrect result. The ancilla "lied" once, but the underlying parity didn't change, so rounds 4 and 5 return to $0$.
- A data error produces a permanent change in the syndrome (all future rounds flip). A measurement error produces a transient blip (only one round is affected). By repeating, we can distinguish the two: a sustained change signals a real data error, while an isolated flip signals a measurement error.

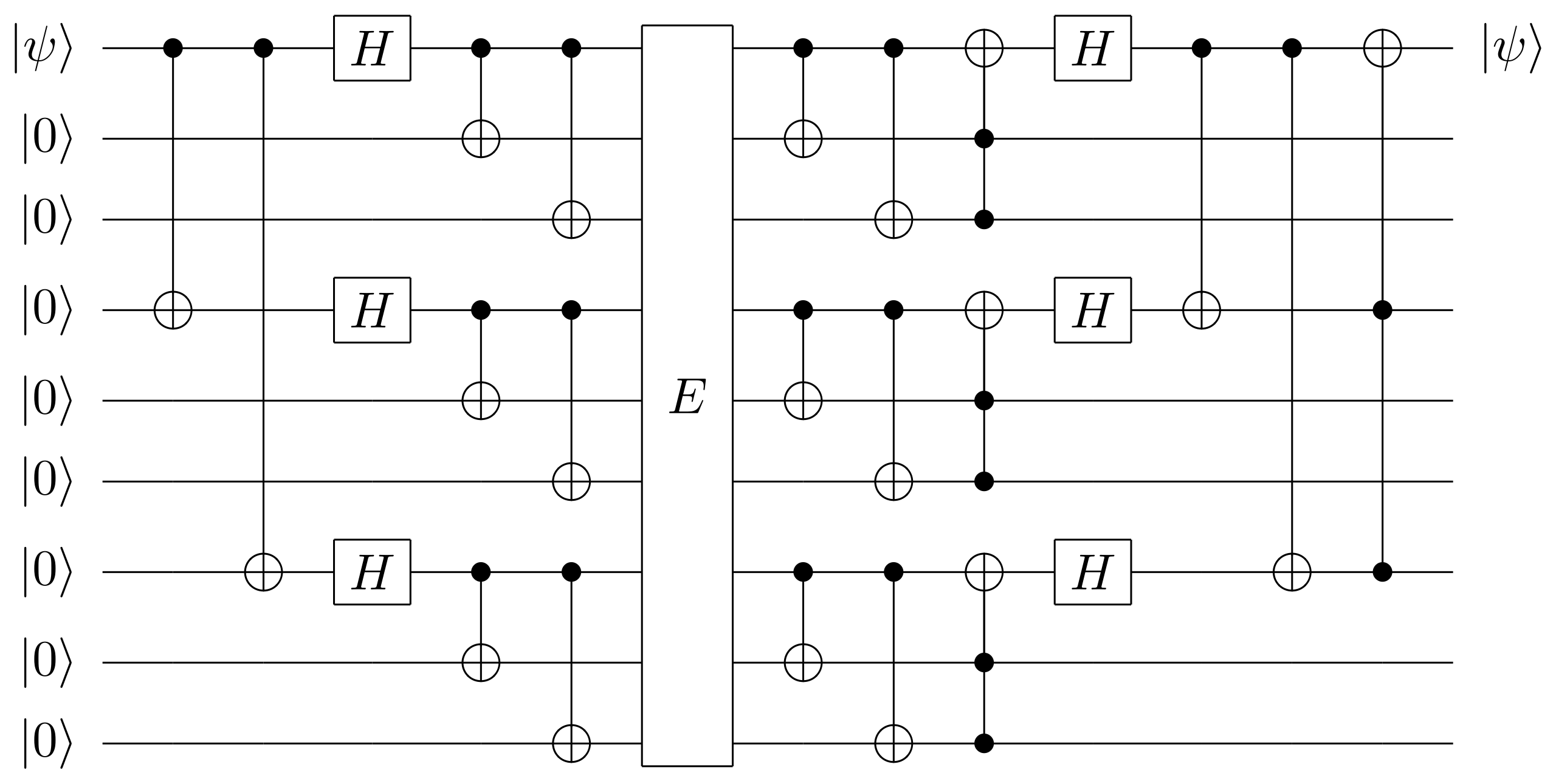

Shor Code: Nine Qubits for Arbitrary Error Protection

If we want to protect against any single-qubit error (bit-flip, phase-flip, or a combination of both), the repetition codes we discussed are not enough.

We must combine both approaches, in a concatenated manner.

Peter Shor proposed a 9-qubit code that nests the bit-flip code inside the phase-flip code [3].

Construction

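The logical states are first encoded with the 3-qubit phase-flip code: $|0\rangle \mapsto |{+}{+}{+}\rangle$ and $|1\rangle \mapsto |{-}{-}{-}\rangle$.

Then, we expand each $|\pm\rangle$ into a block of three qubits using the bit-flip code: $|\pm\rangle \mapsto \frac{1}{\sqrt{2}}(|000\rangle \pm |111\rangle)$.

This results in a 9-qubit state where the logical codewords are:
$$|0_L\rangle = \frac{1}{2\sqrt{2}}(|000\rangle + |111\rangle)(|000\rangle + |111\rangle)(|000\rangle + |111\rangle),$$
$$|1_L\rangle = \frac{1}{2\sqrt{2}}(|000\rangle - |111\rangle)(|000\rangle - |111\rangle)(|000\rangle - |111\rangle).$$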

You can think of this as a two-level hierarchy.

The inner "blocks" (sets of 3 qubits) protect against bit-flips within each block.

The outer structure (the relative signs between blocks) protects against phase-flips.

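Labelling them from 1 to 9, if a bit-flip occurs on qubit 2, the parity checks within the first block ($Z_1Z_2$ and $Z_2Z_3$) both return $-1$, pinpointing qubit 2.

If a phase-flip occurs, it doesn't matter which specific qubit in the block was hit; the phase-flip affects the collective phase of the whole block, turning $|000\rangle + |111\rangle$ into $|000\rangle - |111\rangle$.

Even though errors in nature are continuous (like a small over-rotation $e^{-i\epsilon X}$), the syndrome measurement projects them onto a discrete set of Pauli errors.

We effectively digitize the noise.

Because any single-qubit error $E$ can be written as $E = e_I I + e_X X + e_Y Y + e_Z Z$, being able to correct $X$, $Z$, and $Y = iXZ$ on every qubit is enough to correct an arbitrary single-qubit error.

The encoding circuit for the Shor code is shown in the figure above.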
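The digitization argument rests on the fact that the four Paulis form a basis of the $2 \times 2$ matrices. This NumPy sketch extracts the coefficients $e_P = \mathrm{Tr}(P^\dagger E)/2$ for an example over-rotation $E = e^{-i\theta X} = \cos\theta\, I - i\sin\theta\, X$; the choice of error and of $\theta = 0.1$ is purely illustrative.

```python
import numpy as np

PAULIS = {
    "I": np.eye(2, dtype=complex),
    "X": np.array([[0, 1], [1, 0]], dtype=complex),
    "Y": np.array([[0, -1j], [1j, 0]], dtype=complex),
    "Z": np.array([[1, 0], [0, -1]], dtype=complex),
}


def pauli_coefficients(E):
    """Coefficients e_P with E = sum_P e_P P, using Tr(P^dagger Q) = 2 delta_PQ."""
    return {name: np.trace(P.conj().T @ E) / 2 for name, P in PAULIS.items()}


# Example: a small, continuous over-rotation about X.
theta = 0.1
E = np.cos(theta) * PAULIS["I"] - 1j * np.sin(theta) * PAULIS["X"]   # exp(-i theta X)
coeffs = pauli_coefficients(E)
for name, c in coeffs.items():
    print(name, np.round(c, 4))

# The four components rebuild E exactly.
assert np.allclose(sum(c * PAULIS[n] for n, c in coeffs.items()), E)
```

For this error only the $I$ and $X$ components are non-zero, so after the syndrome measurement the encoded state is found either undisturbed (probability $\cos^2\theta$) or with a clean $X$ error to correct (probability $\sin^2\theta$).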

Correction Protocol

The Shor code decouples the correction of different error types.

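- Correcting $X$ errors: We measure the check operators $Z_1Z_2$ and $Z_2Z_3$ (and their analogues $Z_4Z_5$, $Z_5Z_6$, $Z_7Z_8$, $Z_8Z_9$) within each of the three blocks. This allows us to identify and flip back any single-qubit bit flip.
- Correcting $Z$ errors: A phase flip on any qubit in a block flips the sign of that entire block (e.g., changes $|000\rangle + |111\rangle$ to $|000\rangle - |111\rangle$). To detect this, we measure the "logical" parity between blocks. Specifically, we compare the phase of Block 1 vs Block 2, and Block 2 vs Block 3.

To measure the phase of a block, we use the operator $X_1X_2X_3$ (for Block 1), which has eigenvalue $+1$ on $|000\rangle + |111\rangle$ and $-1$ on $|000\rangle - |111\rangle$.

Therefore, the stabilizers for phase-flip detection are the products of these operators across blocks, such as $X_1X_2X_3X_4X_5X_6$ (Block 1 vs Block 2) and $X_4X_5X_6X_7X_8X_9$ (Block 2 vs Block 3).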

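| Stabilizer | Type | Function |
|---|---|---|
| $Z_1Z_2$, $Z_2Z_3$ | Local | Detects $X$ errors in Block 1 |
| $Z_4Z_5$, $Z_5Z_6$ | Local | Detects $X$ errors in Block 2 |
| $Z_7Z_8$, $Z_8Z_9$ | Local | Detects $X$ errors in Block 3 |
| $X_1X_2X_3X_4X_5X_6$ | Global | Detects $Z$ errors (phase difference between Blocks 1 and 2) |
| $X_4X_5X_6X_7X_8X_9$ | Global | Detects $Z$ errors (phase difference between Blocks 2 and 3) |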
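The table can be verified by brute force on 512-dimensional state vectors. This sketch builds $|0_L\rangle$ and $|1_L\rangle$ from three blocks $(|000\rangle \pm |111\rangle)/\sqrt{2}$ and checks that all eight generators act as $+1$ on both; `pauli_string` and its `"Z1 Z2"` input format are illustrative conventions (qubits numbered 1 to 9, as in the text).

```python
import numpy as np
from functools import reduce

I2 = np.eye(2)
X = np.array([[0, 1], [1, 0]])
Z = np.array([[1, 0], [0, -1]])


def pauli_string(spec, n=9):
    """Build an n-qubit operator from a spec such as 'Z1 Z2' (qubits 1..n)."""
    ops = [I2] * n
    for term in spec.split():
        ops[int(term[1:]) - 1] = {"X": X, "Z": Z}[term[0]]
    return reduce(np.kron, ops)


def block(sign):
    """(|000> + sign |111>) / sqrt(2) on three qubits."""
    v = np.zeros(8)
    v[0], v[7] = 1, sign
    return v / np.sqrt(2)


zero_L = reduce(np.kron, [block(+1)] * 3)
one_L = reduce(np.kron, [block(-1)] * 3)

STABILIZERS = ["Z1 Z2", "Z2 Z3", "Z4 Z5", "Z5 Z6", "Z7 Z8", "Z8 Z9",
               "X1 X2 X3 X4 X5 X6", "X4 X5 X6 X7 X8 X9"]

for spec in STABILIZERS:
    S = pauli_string(spec)
    # Both codewords should be +1 eigenstates of every generator.
    assert np.allclose(S @ zero_L, zero_L) and np.allclose(S @ one_L, one_L)
print("all 8 generators stabilize |0_L> and |1_L>")
```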

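A $Y$ error combines a bit flip and a phase flip on the same qubit: $Y = iXZ$.

Suppose a $Y$ error occurs on qubit 5, i.e., $Y_5 = iX_5Z_5$.

- What is the effect of $X_5$ on the encoded state? Which stabilizers detect it?
- What is the effect of $Z_5$ on the encoded state? Which stabilizers detect it?
- Explain why the ability to correct $X$ and $Z$ separately implies the ability to correct $Y$.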

- $X_5$ is a bit-flip in Block 2. The stabilizers $Z_4Z_5$ and $Z_5Z_6$ will both return $-1$ (since qubit 5 is shared by both). Syndrome: $(-1, -1)$ within Block 2, identifying qubit 5.
- $Z_5$ is a phase-flip on a qubit in Block 2. It flips the sign of the entire Block 2 superposition ($|000\rangle + |111\rangle \leftrightarrow |000\rangle - |111\rangle$). The inter-block stabilizers $X_1X_2X_3X_4X_5X_6$ and $X_4X_5X_6X_7X_8X_9$ will both return $-1$.
- When we measure the syndrome, we first detect and correct the $X$ part (qubit 5 in Block 2), then detect and correct the $Z$ part (Block 2 phase flip). Since $Y = iXZ$, correcting both $X$ and $Z$ on the same qubit also corrects $Y$. The global phase $i$ is unobservable and doesn't affect the logical state. More formally, the syndrome measurement projects any continuous error onto the discrete Pauli basis, so only $I$, $X$, $Y$, or $Z$ corrections are ever needed.
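Because only commutation matters for syndromes, the exercise can also be checked without state vectors by treating Pauli operators as strings (phases ignored). Two Pauli strings anticommute exactly when they carry different non-identity Paulis on an odd number of qubits. The sketch below (hypothetical helper names) applies this rule to the eight Shor generators.

```python
# n-qubit Pauli operators (phases ignored) as strings over {I, X, Y, Z}.
def anticommute(p: str, q: str) -> bool:
    """True iff the strings differ non-trivially on an odd number of qubits."""
    clashes = sum(1 for a, b in zip(p, q) if a != "I" and b != "I" and a != b)
    return clashes % 2 == 1


def single(pauli: str, qubit: int, n: int = 9) -> str:
    """`pauli` on `qubit` (1-indexed, as in the text), identity elsewhere."""
    return "I" * (qubit - 1) + pauli + "I" * (n - qubit)


SHOR_GENERATORS = {
    "Z1Z2": "ZZIIIIIII", "Z2Z3": "IZZIIIIII",
    "Z4Z5": "IIIZZIIII", "Z5Z6": "IIIIZZIII",
    "Z7Z8": "IIIIIIZZI", "Z8Z9": "IIIIIIIZZ",
    "X1..X6": "XXXXXXIII", "X4..X9": "IIIXXXXXX",
}


def syndrome(error: str):
    """Names of the generators that return -1 for the given error."""
    return [name for name, g in SHOR_GENERATORS.items() if anticommute(error, g)]


for label in ("X", "Z", "Y"):
    print(f"{label}5 ->", syndrome(single(label, 5)))
```

The $Y_5$ syndrome is the union of the $X_5$ and $Z_5$ syndromes, which is why correcting the two parts separately suffices.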

The Stabilizer Formalism

Manually tracking states with 9 qubits is already cumbersome, and we can easily imagine how complex it becomes when dealing with tens of qubits.

We come back to the two examples we already mentioned, repetition codes and Shor's code, but now using the Stabilizer Formalism [6].

Instead of describing the state vector, we describe the group of operators $\mathcal{S}$ that leave it invariant.

The central idea is to define a quantum state (or a subspace of states) not by writing out its full vector of amplitudes, but by describing the set of operators that leave that state unchanged.

This is an efficient way to handle quantum error-correcting codes.

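A code is defined by an abelian subgroup $\mathcal{S}$ of the $n$-qubit Pauli group $\mathcal{P}_n$ (with $-I \notin \mathcal{S}$), called the stabilizer group; the codespace is the set of states $|\psi\rangle$ such that $S|\psi\rangle = |\psi\rangle$ for every $S \in \mathcal{S}$.

For a code using $n$ physical qubits and $n - k$ independent stabilizer generators, the codespace has dimension $2^k$, i.e., it encodes $k$ logical qubits.

In terms of protection, we distinguish:

- Detection: An error $E$ is detectable if it anti-commutes with at least one stabilizer $S \in \mathcal{S}$.
- Correction: We measure the generators of $\mathcal{S}$. The resulting eigenvalues ($\pm 1$) form the syndrome that identifies the error.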

The Intuition of Stabilizers

To get a feel for this, let's start by looking at the basic Pauli operators and their eigenstates.

We can characterize specific states by the operators they are "stabilized" by:

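- The operator $Z$ stabilizes the state $|0\rangle$, since $Z|0\rangle = |0\rangle$.
- The operator $-Z$ stabilizes the state $|1\rangle$, since $-Z|1\rangle = |1\rangle$.
- The operator $X$ stabilizes the state $|+\rangle$, since $X|+\rangle = |+\rangle$.
- The operator $-X$ stabilizes the state $|-\rangle$, since $-X|-\rangle = |-\rangle$.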

In each case, we are identifying an operator and an associated eigenstate corresponding to the eigenvalue $+1$.

The codespace is the simultaneous $+1$ eigenspace of all the stabilizers.

If

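- What is the stabilizer of the 2-qubit Bell state $|\Phi^+\rangle = \frac{1}{\sqrt{2}}(|00\rangle + |11\rangle)$? Hint: Find all 2-qubit Pauli operators $P$ (with sign) such that $P|\Phi^+\rangle = |\Phi^+\rangle$.
- How many independent generators does this stabilizer group have? Is this consistent with the formula $n - k$ for $n = 2$ qubits and $k = 0$ logical qubits (since $|\Phi^+\rangle$ is a unique state)?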

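- We check all 2-qubit Pauli operators: $X_1X_2|\Phi^+\rangle = \frac{1}{\sqrt{2}}(|11\rangle + |00\rangle) = |\Phi^+\rangle$, so $XX$ is a stabilizer. $Z_1Z_2|\Phi^+\rangle = \frac{1}{\sqrt{2}}(|00\rangle + (-1)^2|11\rangle) = |\Phi^+\rangle$, so $ZZ$ is a stabilizer. The full stabilizer group is $\{II, XX, -YY, ZZ\}$ (note the sign: $YY|\Phi^+\rangle = -|\Phi^+\rangle$, so it is $-YY = XX \cdot ZZ$ that stabilizes the state).
- Two independent generators: $XX$ and $ZZ$. This gives $n - k = 2 - 0 = 2$ generators, consistent with the formula. The Bell state is a unique state (a 1D subspace, of dimension $2^0 = 1$), which means $k = 0$ encoded qubits.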
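The exhaustive search suggested in the hint takes a few lines of NumPy: try every 2-qubit Pauli operator with both signs and keep those that fix $|\Phi^+\rangle$. This is a sketch for checking the answer, not an efficient method, since the search grows as $4^n$.

```python
import numpy as np
from itertools import product

P = {
    "I": np.eye(2, dtype=complex),
    "X": np.array([[0, 1], [1, 0]], dtype=complex),
    "Y": np.array([[0, -1j], [1j, 0]], dtype=complex),
    "Z": np.array([[1, 0], [0, -1]], dtype=complex),
}
bell = np.array([1, 0, 0, 1], dtype=complex) / np.sqrt(2)   # (|00> + |11>)/sqrt(2)

stabilizer = {}
for a, b in product("IXYZ", repeat=2):
    op = np.kron(P[a], P[b])
    for sign in (+1, -1):
        # Keep the signed operator if it leaves the Bell state invariant.
        if np.allclose(sign * op @ bell, bell):
            stabilizer[a + b] = sign
print(stabilizer)   # {'II': 1, 'XX': 1, 'YY': -1, 'ZZ': 1}
```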

The Bit-Flip Repetition Code in Stabilizer Language

We have already encountered the 3-qubit repetition code for bit-flips.

In this code, our logical states are $|0_L\rangle = |000\rangle$ and $|1_L\rangle = |111\rangle$.

Instead of checking the qubits directly (which would collapse our superposition), we monitor the state using the "parity operators" introduced above, which turn out to be exactly the stabilizers.

For this specific code, the stabilizers are:
$$S_1 = Z_1Z_2, \qquad S_2 = Z_2Z_3.$$

These operators allow us to monitor the states without destroying the encoded information.

By using two stabilizers, we define a 2-dimensional subspace (a logical qubit) within a larger 8-dimensional Hilbert space ($2^3 = 8$).

If we have $n$ physical qubits and $m$ independent stabilizers, the codespace has dimension $2^{n-m}$.

Each independent stabilizer divides the available dimension by 2.

For 3 qubits ($2^3 = 8$ dimensions):

- 1 stabilizer ($Z_1Z_2$) restricts us to a $2^3/2 = 4$-dimensional subspace.
- 2 stabilizers ($Z_1Z_2$ and $Z_2Z_3$) restrict us to a $2^3/2^2 = 2$-dimensional subspace.

This leaves us with a 2-dimensional subspace, which is exactly what we need to encode a single logical qubit.
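The dimension counting can be checked by computing projectors. Since each stabilizer $S$ squares to the identity, $(I + S)/2$ projects onto its $+1$ eigenspace, and for commuting stabilizers the product of these projectors projects onto the codespace; the trace of a projector is the dimension of the subspace it projects onto. A minimal NumPy sketch for the 3-qubit code:

```python
import numpy as np
from functools import reduce

I2, Z = np.eye(2), np.diag([1.0, -1.0])


def kron_all(*ops):
    return reduce(np.kron, ops)


S1 = kron_all(Z, Z, I2)   # Z1 Z2
S2 = kron_all(I2, Z, Z)   # Z2 Z3
identity = np.eye(8)

# Projector onto the +1 eigenspace of each stabilizer.
P1 = (identity + S1) / 2
P2 = (identity + S2) / 2

print("no stabilizer :", int(round(np.trace(identity))))  # 8
print("Z1Z2 only     :", int(round(np.trace(P1))))        # 4
print("Z1Z2 and Z2Z3 :", int(round(np.trace(P1 @ P2))))   # 2
```

The surviving 2-dimensional space is spanned by $|000\rangle$ and $|111\rangle$, which shows up as the only non-zero diagonal entries of `P1 @ P2`.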

- The Shor code uses $n = 9$ physical qubits to encode $k = 1$ logical qubit. How many independent stabilizer generators does it have?
- List all stabilizer generators of the Shor code.

- $n - k = 9 - 1 = 8$ independent stabilizer generators.
- The 8 generators are: $Z_1Z_2$, $Z_2Z_3$, $Z_4Z_5$, $Z_5Z_6$, $Z_7Z_8$, $Z_8Z_9$ (6 local checks), and $X_1X_2X_3X_4X_5X_6$, $X_4X_5X_6X_7X_8X_9$ (2 global checks).

Detecting Errors with Stabilizers

Detecting errors boils down to checking whether the stabilizer eigenvalue has flipped from $+1$ to $-1$.

An error that anti-commutes with our stabilizer will flip its eigenvalue.

We mention again the concept of error syndrome, now within the scope of the stabilizer formalism.

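Consider the state $|\psi_L\rangle = \alpha|000\rangle + \beta|111\rangle$.

If we measure the stabilizer $Z_1Z_2$, we find $Z_1Z_2|\psi_L\rangle = +|\psi_L\rangle$, i.e., eigenvalue $+1$.

However, if a bit-flip error occurs on the second qubit, represented by the operator $X_2$, the state becomes $X_2|\psi_L\rangle = \alpha|010\rangle + \beta|101\rangle$.

Now, if we check our stabilizer $Z_1Z_2$ again, using $Z_2X_2 = -X_2Z_2$: $Z_1Z_2X_2|\psi_L\rangle = -X_2Z_1Z_2|\psi_L\rangle = -X_2|\psi_L\rangle$.

The eigenvalue has changed to $-1$.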

This negative sign is our error syndrome, telling us precisely that something has gone wrong between qubits 1 and 2.

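The detection rule is simple: if error $E$ anti-commutes with stabilizer $S$ ($ES = -SE$), then measuring $S$ on the corrupted state $E|\psi\rangle$ returns $-1$.

If they commute ($ES = SE$), the error is invisible to that stabilizer: measuring $S$ still returns $+1$.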

This is why we need multiple stabilizers: each one is a "detector" sensitive to a different subset of errors.

For the 3-qubit bit-flip code with stabilizers $S_1 = Z_1Z_2$ and $S_2 = Z_2Z_3$:

- Compute the commutator $[X_1, S_1]$. Does $S_1$ detect $X_1$?
- Compute the commutator $[X_3, S_1]$. Does $S_1$ detect $X_3$?
- Explain why the bit-flip code cannot detect $Z$ errors.

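- $X_1S_1 = X_1Z_1Z_2 = -Z_1X_1Z_2 = -S_1X_1$, so $[X_1, S_1] = 2X_1S_1 \neq 0$ (they anti-commute). Yes, $S_1$ detects $X_1$.
- $X_3$ acts on a qubit where $S_1$ is the identity, so $X_3S_1 = S_1X_3$ and $[X_3, S_1] = 0$ (they commute). No, $S_1$ does not detect $X_3$ (it is $S_2$ that does).
- Both $Z_1Z_2$ and $Z_2Z_3$ commute with any single $Z_j$ error, since $Z_j$ commutes with all $Z$-type operators. Therefore, no $Z$ error produces a syndrome flip, and the bit-flip code is completely blind to phase errors.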

How to Measure a Stabilizer

We already saw the stabilizer measurement circuit without knowing it!

For the repetition code, it is exactly the parity-measurement circuits shown earlier, built from controlled-unitary operations (CNOTs).

More generally, if we want to measure any stabilizer $S$ (a Pauli operator with eigenvalues $\pm 1$), we proceed as follows:

- Initialize an ancilla in $|0\rangle$ and apply a Hadamard gate to put it in $|+\rangle$.
- Perform a controlled-$S$ operation where the ancilla is the control and the codespace is the target.
- Apply another Hadamard to the ancilla and measure it.

This should remind you of the phase kickback mechanism, which we discussed in the context of Grover's algorithm and phase estimation!

This construction is depicted in the figure below.

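The joint state of the ancilla and the data $|\psi\rangle$ after the controlled-$S$ is $\frac{1}{\sqrt{2}}\left(|0\rangle|\psi\rangle + |1\rangle S|\psi\rangle\right)$.

Applying the second Hadamard yields:
$$\frac{1}{2}|0\rangle(I + S)|\psi\rangle + \frac{1}{2}|1\rangle(I - S)|\psi\rangle.$$

Measuring the ancilla in the computational basis therefore applies the projector $(I + S)/2$ (outcome $0$, eigenvalue $+1$) or $(I - S)/2$ (outcome $1$, eigenvalue $-1$) to the data.

If the state was already a $\pm 1$ eigenstate of $S$, the outcome is deterministic and the data state is left unchanged.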
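The projector formula can be checked against a direct simulation of the H, controlled-$S$, H circuit. This sketch assumes the ancilla is the first qubit and takes $S = Z_1Z_2$ on two data qubits as an example; `measure_stabilizer` is a hypothetical helper that returns outcome probabilities rather than sampling a single shot.

```python
import numpy as np

H = np.array([[1, 1], [1, -1]]) / np.sqrt(2)
Z = np.diag([1.0, -1.0])


def measure_stabilizer(S, psi):
    """Simulate H, controlled-S, H on an ancilla (first qubit); return (P(0), P(1)).

    The controlled-S is |0><0| (x) I + |1><1| (x) S.
    """
    d = len(psi)
    ctrl_S = np.block([[np.eye(d), np.zeros((d, d))],
                       [np.zeros((d, d)), S]])
    H_anc = np.kron(H, np.eye(d))
    state = np.kron([1.0, 0.0], psi)            # ancilla starts in |0>
    state = H_anc @ ctrl_S @ H_anc @ state
    p0 = float(np.linalg.norm(state[:d]) ** 2)  # ancilla = 0 branch
    p1 = float(np.linalg.norm(state[d:]) ** 2)  # ancilla = 1 branch
    return p0, p1


S = np.kron(Z, Z)                              # Z1 Z2 on two data qubits
even = np.array([0.6, 0, 0, 0.8])              # 0.6|00> + 0.8|11>, eigenvalue +1
odd = np.array([0, 0.6, 0.8, 0])               # 0.6|01> + 0.8|10>, eigenvalue -1
mixed = np.array([1, 0, 1, 0]) / np.sqrt(2)    # |+>|0>, not an eigenstate
for name, psi in [("even", even), ("odd", odd), ("mixed", mixed)]:
    print(name, np.round(measure_stabilizer(S, psi), 3))
```

The two eigenstates give deterministic outcomes, while a state that is not an eigenstate of $S$ gives each outcome with the probability predicted by $\|(I \pm S)|\psi\rangle/2\|^2$.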

- Suppose $|\psi\rangle$ is a $+1$ eigenstate of $S$: $S|\psi\rangle = |\psi\rangle$. Trace through the circuit above and show that the ancilla measurement always returns $0$.
- Now suppose an error has occurred and $|\psi\rangle$ is a $-1$ eigenstate: $S|\psi\rangle = -|\psi\rangle$. Show that the ancilla measurement always returns $1$.
- In the case of the 3-qubit code with $S = Z_1Z_2$, what specific gates replace the "controlled-$S$" box?

-

If

, then after the controlled- :

After the second Hadamard:. Measurement always gives . -

If

, then after the controlled- :

After the second Hadamard:. Measurement always gives . -

The controlled-

can be decomposed as two controlled- gates (or equivalently, two CNOT gates from data qubits to the ancilla, since CNOT implements a controlled- which, combined with Hadamards on the ancilla, effectively measures ).

In practice, for-type stabilizers, we skip the Hadamards on the ancilla and just use two CNOTs from data qubits 1 and 2 to the ancilla.
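The equivalence between the two circuits can be checked numerically. In this NumPy sketch, the qubit order (ancilla, data qubit 1, data qubit 2) is an assumption, and data qubit 3 is left out since it plays no role; the Hadamard-sandwiched controlled-$Z_1 Z_2$ is compared with two CNOTs from the data qubits to the ancilla, and the CNOT version is then applied to basis states to read out the parity. The helper `measured_parity` is hypothetical.

```python
import numpy as np

I2 = np.eye(2)
X = np.array([[0.0, 1.0], [1.0, 0.0]])
Z = np.diag([1.0, -1.0])
H = np.array([[1.0, 1.0], [1.0, -1.0]]) / np.sqrt(2)
P0, P1 = np.diag([1.0, 0.0]), np.diag([0.0, 1.0])


def kron(*ops):
    out = np.array([[1.0]])
    for op in ops:
        out = np.kron(out, op)
    return out


# Controlled-(Z1 Z2) with the ancilla (first factor) as control, sandwiched by Hadamards.
c_zz = kron(P0, I2, I2) + kron(P1, Z, Z)
H_anc = kron(H, I2, I2)
hadamard_test = H_anc @ c_zz @ H_anc

# CNOTs with data qubit 1 (resp. 2) as control and the ancilla as target.
cnot_1a = kron(X, P1, I2) + kron(I2, P0, I2)
cnot_2a = kron(X, I2, P1) + kron(I2, I2, P0)
two_cnots = cnot_2a @ cnot_1a

print(np.allclose(hadamard_test, two_cnots))   # the two circuits are identical


def measured_parity(x1, x2):
    """Ancilla value after the two CNOTs, starting from |0>|x1 x2>."""
    state = np.zeros(8)
    state[(x1 << 1) | x2] = 1.0          # ancilla is the most significant bit
    out = two_cnots @ state
    return int(np.argmax(np.abs(out))) >> 2
```

On computational basis states the ancilla ends up holding $x_1 \oplus x_2$, the classical parity that $Z_1 Z_2$ measures.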

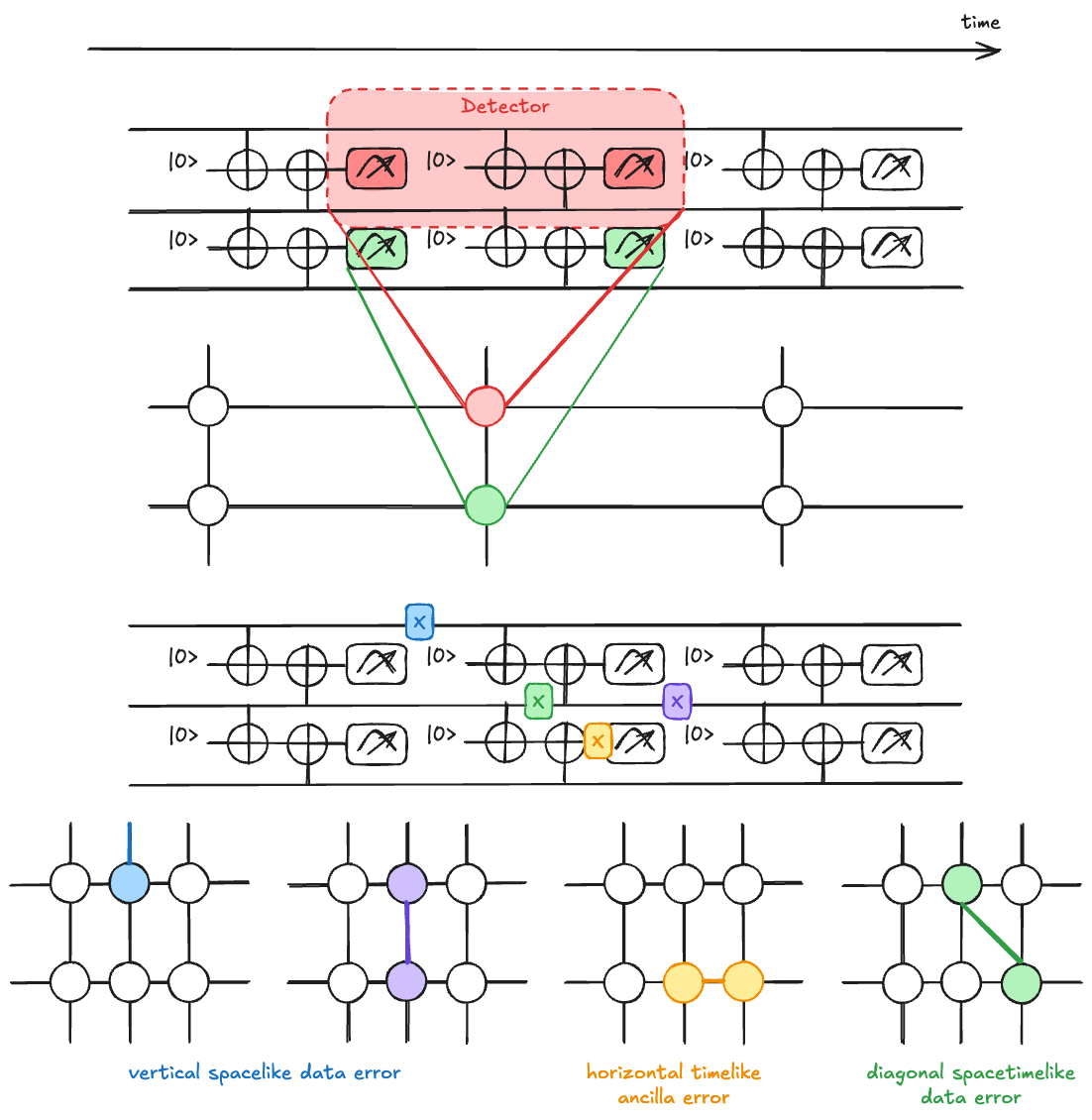

Detectors and Decoding

Now that we have seen the full circuit of stabilizer measurements via repeated syndrome extraction, what should we do with all the ancilla bitstrings?

How do we recover the potentially corrupted encoded data state?

Before turning to the surface code, we discuss decoding by revisiting the repetition code, with a focus on detectors and decoding.

The Logic of Sequential Measurements

In the bit-flip repetition code, we are interested in repeated parity measurements $Z_i Z_{i+1}$ between neighboring data qubits.

As we already mentioned, we don't measure the state of the data qubits directly, as this would collapse the superposition we are trying to protect, but rather the relative parity between neighbors.

When we look at the results of these measurements, we are looking for consistency.

If we have a sequence of measurements, any change in the parity result over time or space signals that an error has occurred.

We define a Detector as a specific set of measurements that, in the absence of any errors, has a deterministic expected parity (usually even).

A Detector Event occurs when a detector returns an "unexpected parity."

It signals that an error has entered the system.

Crucially, a single measurement result in isolation tells us nothing; it is only the relationship between sequential measurements that allows us to track errors.

The intuition is that every sequential pair of measurements forms a detector.

It isn't the specific value of the individual measurement that carries the information, but rather the change between them.

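For instance, if a measurement returns $1$ in two consecutive rounds, the corresponding detector stays silent, whereas a change from $0$ to $1$ (or from $1$ to $0$) between rounds triggers a detector event.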

In practice, here is an example of a raw ancilla measurement sequence and its associated detection signal:

| Ancilla Measurement Sequence | Detection Signal |
|---|---|

The detection signal is essentially the XOR of consecutive bits.

A "1" in the detection signal indicates a detector event, a change in parity.

This is the first step toward building a "syndrome" that we can use for decoding.
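The XOR rule fits in one line of code. The sequence below is a made-up example, not experimental data, and the function name is hypothetical: a persistent parity change (as a data error would cause) shows up as a single event, while an isolated wrong outcome (as a measurement error would cause) shows up as two consecutive events.

```python
def detection_signal(measurements):
    """XOR each ancilla outcome with the previous one: one detector per consecutive pair."""
    return [a ^ b for a, b in zip(measurements, measurements[1:])]


raw = [0, 0, 1, 1, 1, 0, 1, 1]       # hypothetical raw ancilla outcomes, one per round
print(detection_signal(raw))
```

Here the jump from $0$ to $1$ after the second round produces a single event that is never undone, whereas the lone $0$ in the sixth round produces two adjacent events.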

The Error Detection Graph

To make sense of these detector events across many qubits (space) and many rounds of measurement (time), we map the problem onto a Spacetime Graph.

In this representation, we can visualize how physical errors (the "causes") manifest as detector events (the "symptoms").

- Nodes represent potential detector events (the comparison between two measurements).

- Edges represent the physical errors that "link" two detector events together.

An edge connects two nodes if a single physical error triggers exactly those two detector events.

For example, a bit-flip on a data qubit between two measurement rounds triggers only the detectors of the ancillas that share that qubit, and only once: after the flip, the parities stay changed, so later detectors see no new change.

A measurement error on an ancilla, however, triggers the two consecutive detectors of that same ancilla, which corresponds to a "timelike" edge between two consecutive rounds of measurement at the same location.

An example of such a detection graph is shown in Fig..

Inferring Errors through Matching

When we actually run an experiment, we obtain a pattern of measurement results.

We then identify the nodes where the parity was unexpected.

Our goal is to find the most likely set of physical errors (edges) that could have produced this specific pattern of nodes.

This is fundamentally a graph problem.

If we see only two detector events, we can "match" them easily with an edge.

If we have a complex web of events, we use algorithms like Minimum Weight Perfect Matching (MWPM) to infer the most probable error chain.

This mapping of quantum errors onto graphs is a key reason why decoding remains efficient as codes grow 7.
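For a handful of detector events, minimum-weight matching can be done by brute force. The sketch below assumes a single round of perfect parity measurements on a bit-flip repetition code with `n_data` data qubits (0-indexed, ancilla $i$ measuring $Z_i Z_{i+1}$); each event is paired either with another event or with a boundary, with weight equal to the number of data-qubit flips needed. It is exponential in the number of events and only meant to illustrate the idea; practical decoders rely on efficient blossom-based MWPM implementations (PyMatching is one such library). All function names here are hypothetical.

```python
from functools import lru_cache


def boundary_cost(i, n_data):
    """Flips needed to link the event on ancilla i to the nearest end of the chain."""
    # Ancilla i sits between data qubits i and i + 1.
    return min(i + 1, n_data - 1 - i)


def min_weight_matching(events, n_data):
    """Brute-force minimum-weight matching of detector events, boundary allowed.

    Returns (total weight, pairs); a pair is (i, j) or (i, "boundary").
    """
    @lru_cache(maxsize=None)
    def solve(remaining):
        if not remaining:
            return 0, ()
        first, rest = remaining[0], remaining[1:]
        # Option 1: explain `first` with a chain running to the boundary.
        sub_cost, sub_pairs = solve(rest)
        best = (sub_cost + boundary_cost(first, n_data),
                ((first, "boundary"),) + sub_pairs)
        # Option 2: pair `first` with another event via the data qubits in between.
        for k, other in enumerate(rest):
            sub_cost, sub_pairs = solve(rest[:k] + rest[k + 1:])
            cost = sub_cost + (other - first)
            if cost < best[0]:
                best = (cost, ((first, other),) + sub_pairs)
        return best

    return solve(tuple(sorted(events)))


def correction(pairs, n_data):
    """Data qubits to flip back, given the matched pairs."""
    flips = set()
    for a, b in pairs:
        if b == "boundary":
            left, right = set(range(a + 1)), set(range(a + 1, n_data))
            flips ^= left if len(left) <= len(right) else right
        else:
            flips ^= set(range(a + 1, b + 1))
    return sorted(flips)


weight, pairs = min_weight_matching([0, 3], n_data=5)
print(weight, pairs, correction(pairs, 5))
```

With five data qubits and events on ancillas 0 and 3, matching each event to its nearby boundary (weight 2, flipping qubits 0 and 4) beats pairing them through the bulk (weight 3).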

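Consider a distance-3 bit-flip repetition code whose two ancillas are measured over five rounds (rounds 0 to 4), giving four detectors per ancilla.

The detection signal for ancilla 1 (measuring $Z_1 Z_2$) is $0110$.

The detection signal for ancilla 2 (measuring $Z_2 Z_3$) is $0110$.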

- At which time step did the detector events occur for each ancilla?

- Is this pattern consistent with a single data error? If so, on which qubit and at what time?

- Is this pattern also consistent with two measurement errors? Explain.

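Solution:

- Detector events (where the detection signal is 1): ancilla 1 has events at times 2 and 3 (the XOR flipped between rounds 1-2 and again between rounds 2-3), and ancilla 2 also has events at times 2 and 3.
- The detection signal $0110$ means: no change between rounds 0-1, a change between 1-2, a change between 2-3, and no change between 3-4. A bit-flip on qubit 2 between rounds 1 and 2 flips both $Z_1 Z_2$ and $Z_2 Z_3$, which explains the first events of both ancillas (at time 2). However, a data error persists, so on its own it triggers each detector only once; the events at time 3 require a second fault, for instance qubit 2 flipping back between rounds 2 and 3. The pattern is therefore not explained by a single data error alone.
- Yes. One measurement error on each ancilla in round 2 produces the same pattern: each wrong outcome differs from both the previous and the next round, so it triggers events at times 2 and 3 on that ancilla.

This ambiguity is why MWPM decoding is needed: both explanations involve two faults, and the decoder picks the most probable one given the relative data and measurement error rates.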
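The ambiguity can be made concrete by enumerating every set of at most two faults that reproduces the observed detector pattern. This is a sketch under a simplified model assumed here: a fault is either a bit-flip on a data qubit between two rounds or a flipped outcome of one ancilla in one round, and only the four detectors per ancilla of the exercise are considered. Labels follow the exercise (qubits 1 to 3, ancilla 1 measuring $Z_1 Z_2$, ancilla 2 measuring $Z_2 Z_3$, detector at time $t$ comparing rounds $t-1$ and $t$); the function names are hypothetical.

```python
from itertools import combinations

ROUNDS = range(5)                          # measurement rounds 0..4
TIMES = range(1, 5)                        # detector t compares rounds t-1 and t
ANCILLA_QUBITS = {1: (1, 2), 2: (2, 3)}    # ancilla -> data qubits of its parity check


def fault_list():
    faults = [("data", q, t) for q in (1, 2, 3) for t in TIMES]     # X between rounds t-1, t
    faults += [("meas", a, r) for a in ANCILLA_QUBITS for r in ROUNDS]  # outcome flipped
    return faults


def detectors_flipped(fault):
    kind, where, when = fault
    if kind == "data":
        # A data flip changes the parity of every ancilla touching that qubit, once.
        return {(a, when) for a, qs in ANCILLA_QUBITS.items() if where in qs}
    # A wrong outcome in round r differs from rounds r-1 and r+1.
    return {(where, t) for t in (when, when + 1) if t in TIMES}


def syndrome(faults):
    flipped = set()
    for f in faults:
        flipped ^= detectors_flipped(f)
    return flipped


def explanations(observed, max_weight=2):
    return [combo for w in range(1, max_weight + 1)
            for combo in combinations(fault_list(), w) if syndrome(combo) == observed]


observed = {(1, 2), (1, 3), (2, 2), (2, 3)}    # events at times 2 and 3 on both ancillas
for combo in explanations(observed):
    print(combo)
```

No single fault matches. Exactly two weight-2 explanations remain: two data errors on qubit 2 (at times 2 and 3), or one measurement error on each ancilla in round 2.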

Now that we are familiar with the stabilizer formalism and the decoding problem, and have built intuition from the repetition code, we turn to the surface code, probably the most studied QEC code.

Surface Code

Limitations of the Shor Code

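The Shor Code showed we could protect against both bit-flip ($X$) and phase-flip ($Z$) errors by concatenating two repetition codes.

However, the Shor code has a distinct hierarchy: we have local $Z$-type checks inside each block of three qubits (such as $Z_1 Z_2$), but $X$-type checks that span two whole blocks (such as $X_1 X_2 X_3 X_4 X_5 X_6$).

The disadvantage is the "non-locality" of these $X$-type checks.

In a physical architecture, requiring a 6-qubit joint measurement ($X^{\otimes 6}$) across qubits that are not neighbors is costly and error-prone.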

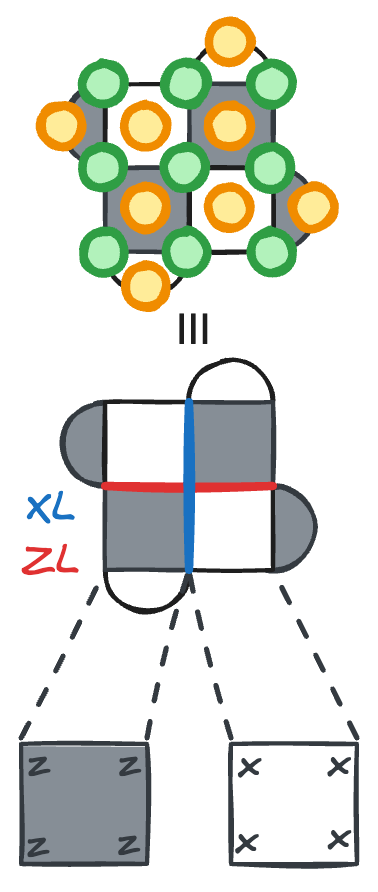

The Surface Code

The Surface Code takes a different approach.

Instead of mixing local and global checks, we make everything local.

By arranging qubits on a 2D lattice, we can perform all parity checks using only nearest-neighbor interactions, each check involving at most 4 data qubits.

In the surface code, we define two types of plaquettes:

- $X$-plaquettes (darker regions), which measure the parity of $X$ operators on the 4 surrounding data qubits to detect $Z$ errors.
- $Z$-plaquettes (lighter regions), which measure the parity of $Z$ operators on the 4 surrounding data qubits to detect $X$ errors.

The logical codespace is the common $+1$ eigenspace of all these plaquette stabilizers.

The lattice structure is illustrated in Fig..

Locality means each stabilizer measurement only involves nearest-neighbor qubits on a 2D layout.

Long-range interactions are either impossible (superconducting chip) or error-prone (atom movement) on most platforms, so this matters a lot in practice.

The surface code gives the same protection as the Shor code but with only local operations, which is why it is the leading candidate for fault-tolerant quantum computing, as its numerous experimental implementations show.

The 2D layout and the low weight of the stabilizers are the main reasons why the surface code has been so widely studied.

Another reason, which we will discuss soon, is its high accuracy threshold: it tolerates a relatively large error probability per physical gate before the QEC protection stops working.

Constructing the Logical State

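How do we write the logical state $|\bar{0}\rangle$ explicitly?

We will see that it is not as straightforward as for the repetition code, where $|\bar{0}\rangle = |000\rangle$.

To construct the logical $|\bar{0}\rangle$, we project a simple product state onto the codespace with the stabilizer projectors $(I + S)/2$.

If we start from $|0\rangle^{\otimes n}$, the state is already a $+1$ eigenstate of every $Z$-type stabilizer (and of the logical $\bar{Z}$), so only the $X$-type stabilizers $S_X$ need to be projected.

Each projector creates a superposition:
$$\frac{I + S_X}{2}|\phi\rangle = \frac{1}{2}\left(|\phi\rangle + S_X|\phi\rangle\right),$$

where $S_X|\phi\rangle$ is the basis state $|\phi\rangle$ with the data qubits of the plaquette flipped.

As we apply successive stabilizer projectors, the state branches further (the number of branches doubles for each independent stabilizer), spreading entanglement across the surface.

The resulting state is a highly entangled superposition:
$$|\bar{0}\rangle = \frac{1}{\sqrt{|\mathcal{G}_X|}} \sum_{g \in \mathcal{G}_X} g\, |0\rangle^{\otimes n},$$

where $\mathcal{G}_X$ is the group generated by the $X$-type stabilizers; it contains $2^{m_X}$ elements for $m_X$ independent generators.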
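The projection can be carried out explicitly on the smallest example. This NumPy sketch assumes one standard layout of the distance-3 rotated surface code, which may differ from the figure: data qubits numbered 1 to 9 row by row on a $3\times3$ grid, weight-4 plaquettes $X_1X_2X_4X_5$, $X_5X_6X_8X_9$, $Z_2Z_3Z_5Z_6$, $Z_4Z_5Z_7Z_8$, and weight-2 boundary checks $X_2X_3$, $X_7X_8$, $Z_1Z_4$, $Z_6Z_9$. Starting from $|0\rangle^{\otimes 9}$, it applies $(I + S_X)/2$ for each $X$-type stabilizer; the helper names are hypothetical.

```python
import numpy as np

N = 9  # data qubits 1..9, row by row on a 3x3 grid (assumed layout)
X_STABS = [(1, 2, 4, 5), (5, 6, 8, 9), (2, 3), (7, 8)]
Z_STABS = [(2, 3, 5, 6), (4, 5, 7, 8), (1, 4), (6, 9)]


def mask(qubits):
    return sum(1 << (q - 1) for q in qubits)


def apply_x(state, qubits):
    """An X string permutes basis states by flipping the chosen bits."""
    m = mask(qubits)
    idx = np.arange(state.size)
    out = np.empty_like(state)
    out[idx ^ m] = state
    return out


def z_eigenvalues(qubits):
    """Diagonal of a Z string: (-1) to the parity of the chosen bits."""
    m = mask(qubits)
    parity = np.array([bin(i & m).count("1") % 2 for i in range(2 ** N)])
    return 1 - 2 * parity


def logical_zero():
    state = np.zeros(2 ** N)
    state[0] = 1.0                                   # |0...0>, +1 for every Z stabilizer
    for s in X_STABS:
        state = 0.5 * (state + apply_x(state, s))    # projector (I + S_X) / 2
    return state / np.linalg.norm(state)


psi = logical_zero()
print("nonzero amplitudes:", np.count_nonzero(np.abs(psi) > 1e-12))
```

The result has $2^4 = 16$ equal amplitudes, one per element of the group generated by the four $X$-type stabilizers, and it is a $+1$ eigenstate of all eight stabilizers. The code itself is specified by eight short tuples of qubit indices, while the state vector needs $2^9 = 512$ amplitudes.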

The Efficiency of the Stabilizer Formalism

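When we look at a lattice of $n$ physical qubits, writing such a state explicitly requires tracking up to $2^n$ complex amplitudes, a number that grows exponentially with the size of the lattice.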

The stabilizer formalism avoids this entirely.

Instead of an exponential description of the state, we rely on a linear description via the stabilizers.

This is what makes such codes tractable despite the exponential size of the many-body Hilbert space.

Defining the Logical Operators

To actually use our code for computation, we need to define our logical space.

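Since we are dealing with a stabilizer code, we will not define the code from its logical states but from its logical operators $\bar{X}$ and $\bar{Z}$.

Specifically, we want to find a logical $\bar{X}$ that maps $|\bar{0}\rangle$ to $|\bar{1}\rangle$, and a logical $\bar{Z}$ that applies a relative phase of $-1$ between them.

From a physical perspective, $\bar{X}$ is a string of physical $X$ operators stretching across the lattice.

It cannot be just any string; it must commute with all the stabilizers in our stabilizer group $\mathcal{S}$, without belonging to $\mathcal{S}$ itself.

Stabilizers act trivially on the whole codespace: they cannot tell one logical state from another.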

If a stabilizer measurement could distinguish between states within the codespace, it would necessarily collapse the logical state.

Therefore, any logical operator, which by definition moves us from one point in the codespace to another, must commute with all stabilizers to ensure it doesn't leave the "protected" subspace or trigger an error syndrome.

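Any chain of physical $X$ operators connecting the appropriate pair of opposite boundaries of the lattice commutes with all the stabilizers and implements $\bar{X}$.

These different "versions" of $\bar{X}$ differ from one another by multiplication with $X$-type stabilizers, so they act identically on the codespace.

Logical operators are therefore defined only up to stabilizers: each one is an equivalence class of strings.

Similarly, we define the logical $\bar{Z}$ as a string of physical $Z$ operators connecting the other two boundaries.

For example, in a small $3 \times 3$ lattice with data qubits numbered 1 to 9 row by row, and with boundaries chosen so that $\bar{X}$ runs vertically: $\bar{X} = X_1 X_4 X_7$ and $\bar{Z} = Z_1 Z_2 Z_3$.

Just as physical $X$ and $Z$ anticommute, $\bar{X}$ and $\bar{Z}$ anticommute: the two strings cross on an odd number of qubits (here, only qubit 1).

This topological "intersection number" is the deep reason why the code works as a single logical unit.

The code distance $d$ is the weight of the shortest logical operator, i.e., the smallest number of physical errors that can produce an undetectable logical error.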

In this picture, quantum information is not stored at specific locations but in the global, non-local properties of the entire lattice 8.

Consider a distance-3 surface code.

- How many data qubits, $X$-stabilizers, and $Z$-stabilizers does the code have?
- Verify the $[[n, k, d]]$ parameters using $k = n - (\text{number of independent stabilizers})$.
- Give an example of a weight-3 logical $\bar{X}$ operator (a string of 3 $X$ operators crossing the lattice).
- Why can't a weight-2 string of $X$ operators be a logical operator?

Solution:

- A distance-3 surface code has $n = 9$ data qubits (on a rotated lattice), 4 $X$-stabilizers, and 4 $Z$-stabilizers, for a total of 8 independent stabilizers.
- $k = 9 - 8 = 1$ logical qubit and $d = 3$, so the code is $[[9, 1, 3]]$.
- A string of three $X$ operators along one column of the lattice, such as $X_1 X_4 X_7$, connecting the top boundary to the bottom boundary (in a convention where $\bar{X}$ runs vertically).
- A weight-2 string cannot span the full lattice. It is either equivalent to a stabilizer (if it forms a closed loop or coincides with a weight-2 boundary stabilizer) or it anti-commutes with some stabilizer (if it is an open, partial string). Neither case gives a valid logical operator, consistent with $d = 3$.
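The $[[9, 1, 3]]$ parameters can be checked with the bit-vector (symplectic) representation of Pauli operators. The sketch below assumes one standard convention for the distance-3 rotated surface code, not necessarily the one of the figure: qubits 1 to 9 row by row, stabilizers $X_1X_2X_4X_5$, $X_5X_6X_8X_9$, $X_2X_3$, $X_7X_8$, $Z_2Z_3Z_5Z_6$, $Z_4Z_5Z_7Z_8$, $Z_1Z_4$, $Z_6Z_9$, and logicals $\bar X = X_1X_4X_7$, $\bar Z = Z_1Z_2Z_3$. It computes $k$ from the GF(2) rank of the stabilizers and confirms that no $X$ string of weight 1 or 2 is a nontrivial logical operator. Function names are hypothetical.

```python
from itertools import combinations

N = 9
X_STABS = [(1, 2, 4, 5), (5, 6, 8, 9), (2, 3), (7, 8)]
Z_STABS = [(2, 3, 5, 6), (4, 5, 7, 8), (1, 4), (6, 9)]


def bits(qubits):
    return sum(1 << (q - 1) for q in qubits)


def x_op(qubits):
    return (bits(qubits), 0)        # (x part, z part) as bit masks


def z_op(qubits):
    return (0, bits(qubits))


def commute(p, q):
    """Paulis commute iff |x_p & z_q| + |z_p & x_q| is even."""
    return (bin(p[0] & q[1]).count("1") + bin(p[1] & q[0]).count("1")) % 2 == 0


def gf2_rank(vectors):
    """Rank over GF(2) of integers read as bit vectors (Gaussian elimination)."""
    pivots = {}                     # leading bit -> vector with that leading bit
    for v in vectors:
        while v:
            top = v.bit_length() - 1
            if top not in pivots:
                pivots[top] = v
                break
            v ^= pivots[top]
    return len(pivots)


def as_int(p):
    return (p[0] << N) | p[1]


stabilizers = [x_op(s) for s in X_STABS] + [z_op(s) for s in Z_STABS]
X_L, Z_L = x_op((1, 4, 7)), z_op((1, 2, 3))

n = N
r = gf2_rank([as_int(s) for s in stabilizers])
k = n - r
print("n, k =", n, k)


def is_nontrivial_x_logical(qubits):
    """X string that commutes with every stabilizer but is not a product of them."""
    op = x_op(qubits)
    if not all(commute(op, s) for s in stabilizers):
        return False
    return gf2_rank([as_int(s) for s in stabilizers] + [as_int(op)]) > r


short = [qs for w in (1, 2) for qs in combinations(range(1, N + 1), w)
         if is_nontrivial_x_logical(qs)]
print("nontrivial X logicals of weight <= 2:", short)
print("X_L and Z_L anticommute:", not commute(X_L, Z_L))
```

The only weight-2 $X$ strings that commute with every $Z$-type stabilizer are the boundary stabilizers $X_2X_3$ and $X_7X_8$ themselves, so the shortest nontrivial $X$ logical has weight 3.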

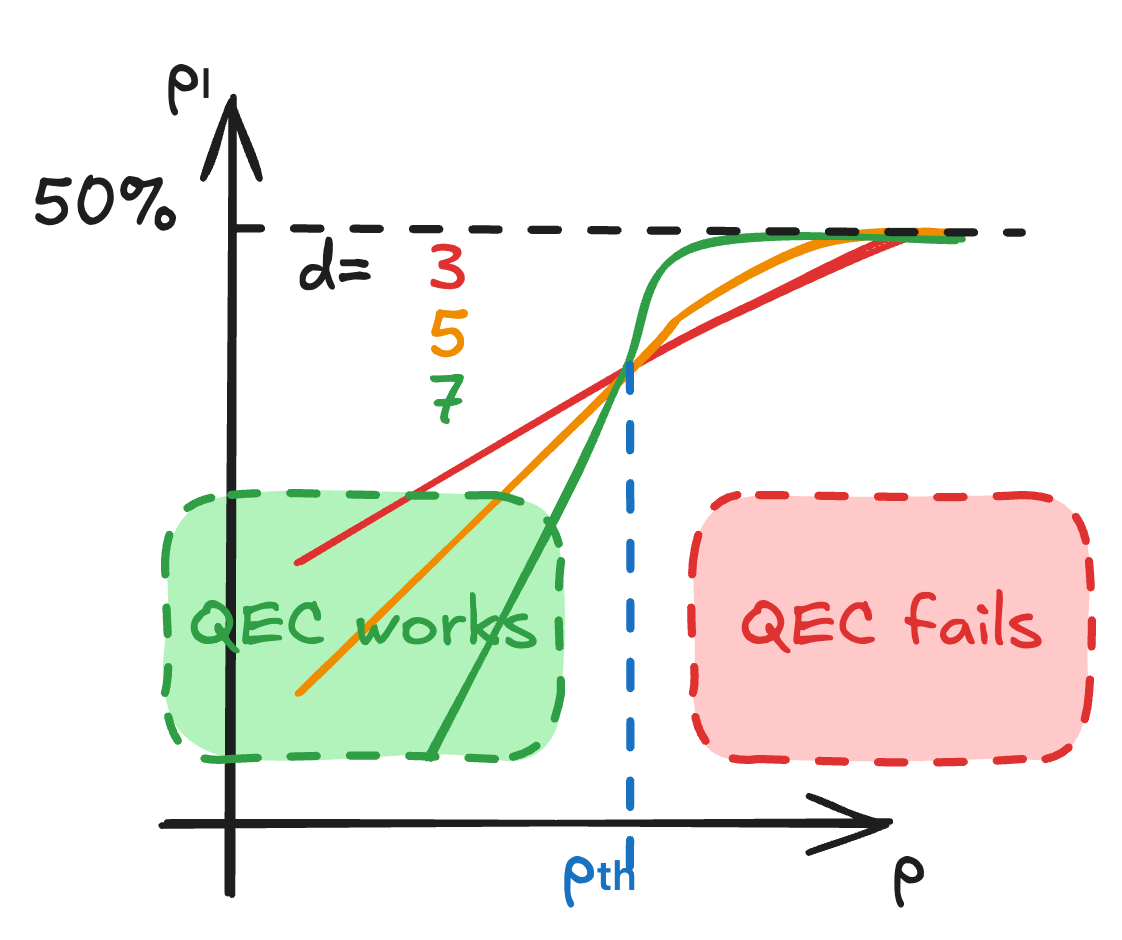

The Threshold Theorem and Scaling

We briefly mentioned the high accuracy threshold of the surface code as being one of the nice features of this code.

Now it's time to be more precise on this specific point.

Classes of Errors

First, when we analyze how errors propagate and are caught in a QEC code like the surface code, we categorize them by their orientation in the (2+1)D spacetime volume (the "+1" standing for time), just as we did for the repetition code in the decoding section:

- Horizontal matching corresponds to time-like errors: syndrome measurement failures. A flip in the measurement outcome appears as two syndrome changes separated in time.

- Vertical matching represents space-like errors: the "classic" data qubit errors (Pauli $X$ or $Z$) that occur on the physical qubits between measurement cycles.

- Diagonal matching represents spacetime errors: often arising from errors during the entangling gates (like a CNOT) of the stabilizer measurement circuit itself. A single failure in a CNOT can propagate to both the data qubit and the ancilla, creating a correlated error that is diagonal in our decoding graph.

The Threshold

To visualize if our error correction is actually helping, we use a Threshold Plot.

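This plot compares the physical error rate $p$ (horizontal axis) with the logical error rate $p_L$ (vertical axis) for several code distances.

As we increase the code distance $d$, the behavior of $p_L$ depends on which side of a critical value, the threshold $p_{th}$, the physical error rate lies.

We can model the logical error rate as:
$$p_L \approx A \left(\frac{p}{p_{th}}\right)^{(d+1)/2},$$
where $A$ is a constant prefactor.

- When $p < p_{th}$: we are in the "protection" regime. Larger $d$ is better. Increasing the distance suppresses the logical error rate exponentially.
- When $p > p_{th}$: we are in the "noise-dominated" regime. Larger $d$ is worse. Adding more qubits just adds more opportunities for errors to occur, and decoding can no longer compensate. The logical error rate actually increases with $d$, eventually saturating at $p_L = 1/2$, which represents total loss of information (random noise).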

This behavior is shown in the threshold plot Fig..

The abscissa (horizontal coordinate) of the "crossing point" on the graph is the threshold $p_{th}$.

For the surface code under circuit-level noise, this is often cited around $1\%$.

But be aware that this value varies significantly depending on the noise model of the gates, the circuit implementation and the decoder used.

Current superconducting and neutral atom platforms are operating near or just below this threshold.

Using the approximate formula

- For

and , compute . - For

and , compute . - For

and , compute . - By what factor does

decrease when going from to ? What about to ?

With

-

-

-

-

From

to : factor of improvement.

Fromto : factor of improvement.

Each increment of 2 in distance gives another order of magnitude in error suppression (when $p/p_{th} = 0.1$).
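The heuristic scaling law is easy to tabulate. In this sketch, the prefactor $A = 0.1$ and the values $p = 10^{-3}$, $p_{th} = 10^{-2}$ are hypothetical choices giving $p/p_{th} = 0.1$; the output is capped at $1/2$ to mimic the saturation above threshold, which the bare formula does not capture. The function name is hypothetical.

```python
def logical_error_rate(p, p_th, d, prefactor=0.1):
    """Heuristic p_L ~ A (p/p_th)^((d+1)/2), capped at 1/2 (random logical state)."""
    return min(0.5, prefactor * (p / p_th) ** ((d + 1) / 2))


p, p_th = 1e-3, 1e-2                    # hypothetical values, p / p_th = 0.1
rates = {d: logical_error_rate(p, p_th, d) for d in (3, 5, 7)}
for d, p_L in rates.items():
    print(f"d = {d}: p_L ~ {p_L:.1e}")
print("suppression 3->5:", rates[3] / rates[5], " 5->7:", rates[5] / rates[7])
```

Whatever the prefactor, the suppression factor per step of 2 in distance is $p_{th}/p$; above threshold (for instance $p = 2\times10^{-2}$) the same function grows with $d$ until it hits the cap.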

Circuit Implementation and Fault-Tolerant Design

When we move from the theoretical framework of the surface code to its physical implementation, we must consider the specific gates that realize our stabilizers.

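A $Z$-type stabilizer such as $Z_1 Z_2 Z_3 Z_4$ detects bit-flip ($X$) errors on the four data qubits of its plaquette.

An $X$-type stabilizer such as $X_1 X_2 X_3 X_4$ detects phase-flip ($Z$) errors on the four data qubits of its plaquette.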

To measure these stabilizers without destroying the quantum information in the data qubits, we introduce an ancilla qubit, just like for the repetition code.

The circuit involves a sequence of four CNOT gates (compared to 2 for the repetition code) between the ancilla and the data qubits.

But we cannot apply these gates in any arbitrary order.

The sequence of CNOTs matters for fault tolerance because of how errors propagate through the circuit.

The Intuition of Error Propagation

Again, our goal is to detect errors in the data by periodically checking the stabilizers via the ancilla.

But the ancilla itself is a physical qubit prone to noise.

If a single error occurs on the ancilla during the measurement sequence and propagates to multiple data qubits, we risk creating a high-weight error from a single fault.

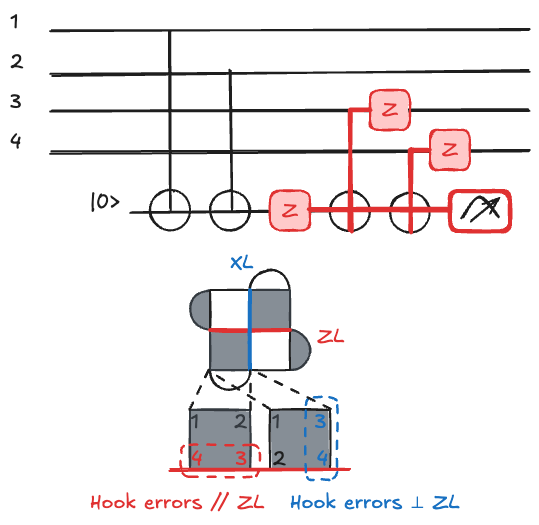

Consider the "Hook error."

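If a single error hits the ancilla in the middle of the sequence, it propagates to every data qubit targeted by the CNOTs that follow.

In a four-qubit plaquette, an error after the second CNOT could result in a weight-2 error on the last two data qubits touched by the circuit.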

A single fault on an ancilla that propagates to two or more data qubits is often called a hook error.

In the surface code, if these errors align poorly, they can effectively reduce the code distance.

Gate Ordering

The danger arises when propagated errors align with our logical operators.

For a distance-3 surface code, one hook error could lead to 2 physical data errors, which is already enough to cause a logical error.

On a distance-5 code, if two hook errors occur due to unlucky CNOT ordering, they could lead to 4 data errors that form a chain across the lattice, potentially also resulting in a logical error.

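To prevent this, we follow a specific design rule: the last two qubits touched by a stabilizer measurement circuit must be aligned perpendicular to the logical operator of the same type (for an $X$-stabilizer, perpendicular to $\bar{X}$).

By orienting the "stretch" of the hook error so it doesn't bridge the gap between boundaries, we ensure that a single fault cannot reduce the effective code distance, so it doesn't lead to a premature logical failure.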

One solution is to use a specific CNOT ordering, often a "Z-shape" or "N-shape" on qubits in the plaquette.

Specific orderings ensure that the "last 2 qubits touched" rule is preserved, as shown in Fig..

This specific geometric solution for gate ordering is not unique, but some ordering that respects this rule is required to preserve the full effective code distance, and with it the expected error suppression of the surface code.

The specific mapping differs for architectures like Neutral Atoms due to different connectivity and constraints.

Consider an $X$-stabilizer $X_1 X_2 X_3 X_4$ measured with an ancilla prepared in $|+\rangle$ and four CNOTs, with the ancilla as control, targeting data qubits 1, 2, 3, 4 in that order.

- If an $X$ error occurs on the ancilla between the 2nd and 3rd CNOT, which data qubits are affected? What is the resulting data error?
- If instead the CNOT order is 1, 3, 2, 4, and the same $X$ error occurs between the 2nd and 3rd CNOT, which data qubits are affected?
- Explain why the second ordering is better for fault tolerance in the surface code.

Solution:

- With ordering (a), 1, 2, 3, 4: the $X$ error after the 2nd CNOT propagates via the 3rd and 4th CNOTs. Each CNOT (ancilla as control for the $X$-stabilizer measurement) copies an $X$ error from the ancilla onto its data target, so data qubits 3 and 4 receive $X$ errors. Resulting error: $X_3 X_4$ on the data, a weight-2 error.
- With ordering (b), 1, 3, 2, 4: after the 2nd CNOT (which targets qubit 3), the $X$ error propagates via the 3rd CNOT (targeting qubit 2) and the 4th CNOT (targeting qubit 4). Resulting error: $X_2 X_4$ on the data.
- In the surface code, qubits 3 and 4 in ordering (a) are adjacent and their $X_3 X_4$ error aligns with the direction of the logical $\bar{X}$ string, potentially helping to bridge the boundaries. In ordering (b), qubits 2 and 4 are not adjacent along the logical string direction; the $X_2 X_4$ error stretches perpendicular to $\bar{X}$. A perpendicular error is less dangerous because it doesn't contribute to shortening the effective code distance along the logical operator direction.
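Hook errors follow from the standard CNOT conjugation rules: an $X$ on the control spreads to the target, and a $Z$ on the target spreads to the control. The sketch below pushes a single ancilla fault through the remaining CNOTs of an $X$-stabilizer circuit (ancilla as control); the function names are hypothetical and the data-qubit labels 1 to 4 follow the exercise.

```python
def propagate(pauli, gates):
    """Push a Pauli error through CNOTs given as (control, target) pairs.

    pauli maps qubit -> "X", "Y" or "Z". Conjugation rules for CNOT(c, t):
    X_c -> X_c X_t and Z_t -> Z_c Z_t, while X_t and Z_c are unchanged.
    Returns the sets of qubits carrying an X part and a Z part.
    """
    x = {q for q, p in pauli.items() if p in ("X", "Y")}
    z = {q for q, p in pauli.items() if p in ("Z", "Y")}
    for c, t in gates:
        if c in x:
            x ^= {t}
        if t in z:
            z ^= {c}
    return x, z


def hook(order, fault_after):
    """Data qubits hit by an X fault on the ancilla after `fault_after` CNOTs."""
    gates = [("anc", q) for q in order]         # ancilla controls every CNOT
    x, _ = propagate({"anc": "X"}, gates[fault_after:])
    return sorted(q for q in x if q != "anc")


print(hook([1, 2, 3, 4], 2))   # ordering (a)
print(hook([1, 3, 2, 4], 2))   # ordering (b)
```

A fault before the first CNOT spreads to all four qubits, which is the stabilizer itself and therefore harmless, and a fault after the first CNOT gives a weight-3 error equivalent to a weight-1 error up to that stabilizer; the fault after the second CNOT is the one that matters. A $Z$ fault on the ancilla does not spread to the data in this circuit; it only corrupts the measurement outcome.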

The Surface Code Detection Graph

To decode errors on the Surface code, we move from a 2D physical layout to a 3D Detection Graph.

While a 1D repetition code allows us to visualize errors on a line, the surface code requires a 2D plane of qubits.

When we add the dimension of time (the repeated measurement rounds), we get a 3D spacetime volume.

Nothing differs from the repetition code: in this graph, "nodes" still represent stabilizer measurement events, and "edges" represent potential errors (either physical qubit flips or measurement bit-flips).

If a stabilizer changes its value between round $t$ and round $t+1$, a detection event (a "defect") appears on the corresponding node.

The job of the decoder is then to pair these defects in a way that most likely explains the underlying physical noise.

Implementing Logical Gates

Logical Initialization and Measurement

We briefly mention two important logical operations: initialization and measurement.

We begin with logical initialization, where we prepare the code in a specific state.

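For the surface code, we typically initialize the logical state $|\bar{0}\rangle$ by preparing every data qubit in $|0\rangle$ and then measuring all the stabilizers.

Indeed, projecting (via measurement) the product state $|0\rangle^{\otimes n}$ onto the stabilizer eigenspaces yields $|\bar{0}\rangle$: the $Z$-type stabilizers and $\bar{Z}$ already have value $+1$, while the random outcomes of the first $X$-type measurements are simply recorded as the reference for later rounds.

Conversely, to prepare the logical $|\bar{+}\rangle$, we prepare every data qubit in $|+\rangle$ and measure all the stabilizers; the roles of $X$ and $Z$ are exchanged.

Logical measurement in the $Z$ basis is performed by measuring every data qubit in the $Z$ basis.

We then aggregate these results to determine the parity of the logical operator $\bar{Z}$.

Similarly, measurement in the $X$ basis is done by measuring every data qubit in the $X$ basis and computing the parity of $\bar{X}$.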

The Surface Code Memory Experiment

The "Memory Experiment" is the basic test of whether a quantum error-correcting code actually works, relying simply on logical initialization, repeated stabilizer measurement and logical measurement.

The goal is simple: can we keep a logical qubit alive longer than its constituent physical parts?

The procedure follows a specific cadence.

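First, we initialize the logical state, for instance $|\bar{0}\rangle$.

Then, we enter a "syndrome extraction" phase consisting of repeated rounds of stabilizer measurements.

In each round, we measure the parity of every $X$-type and $Z$-type stabilizer using the ancilla qubits.

We don't just measure once.

Because our measurements themselves are noisy, we must repeat these rounds many times (typically at least $d$ rounds), so that measurement errors can be distinguished from data errors in the spacetime detection graph.

Finally, we perform a logical measurement in the same basis as the initialization, by measuring all the data qubits.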

By comparing the initial state, the history of measurement syndromes, and the final readout, we can determine if the logical information remained intact.

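Storing a logical qubit via a memory experiment is not enough, though: we also need to manipulate it.

Some manipulations are easier than others: for the surface code, the logical CNOT and the Hadamard are transversal (modulo some hidden details; the transversal Hadamard, for instance, also swaps the roles of the $X$ and $Z$ boundaries). Transversal means that a logical gate is applied by simply performing the same gate on every physical qubit (or on every pair of corresponding qubits in two code blocks, for the CNOT).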

However, the Eastin-Knill theorem tells us that no single code can implement a universal gate set transversally.

How to get a non-Clifford gate then?

We rely on quantum teleportation.

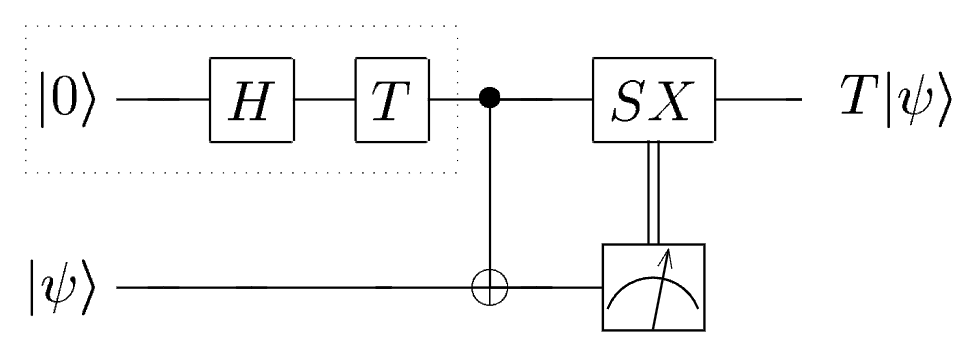

Non-Clifford Gates: T via Teleportation

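The most difficult gate to implement fault-tolerantly is the $T$ gate, $T = \mathrm{diag}(1, e^{i\pi/4})$.

Since we cannot perform it transversally on the surface code without destroying the code's protection, we use Gate Teleportation.

The idea is to "pre-bake" the non-Cliffordness into a special resource state, called a Magic State: $|A\rangle = T|+\rangle = \frac{1}{\sqrt{2}}\left(|0\rangle + e^{i\pi/4}|1\rangle\right)$.

We then use only Clifford operations (which are "easy" and fault-tolerant) to consume this magic state and teleport the $T$ gate onto the data qubit.

The circuit applies a CNOT from the data qubit (control) to the magic-state qubit (target), then measures the magic-state qubit in the $Z$ basis; this transfers the phase $e^{i\pi/4}$ onto the data qubit.

If we measure the resource qubit and get a "1" outcome, the data qubit has instead received $T^\dagger$, so we must apply a corrective $S$ gate, since $S T^\dagger = T$.

Then, the process of Magic State Distillation allows us to convert many such noisy magic states into one of much higher fidelity, which we then inject into our computation using the circuit shown in Fig..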
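The teleportation gadget can be checked on a single data qubit with NumPy. In this sketch, the magic state is $T|+\rangle$, the CNOT has the data qubit as control and the magic qubit as target, and the correction for outcome 1 is $S$; the random input state and the helper names are illustrative.

```python
import numpy as np

T = np.diag([1.0, np.exp(1j * np.pi / 4)])
S = np.diag([1.0, 1j])
plus = np.array([1.0, 1.0]) / np.sqrt(2)
magic = T @ plus                                  # |A> = T|+>

# CNOT with the data qubit (first factor) as control, magic qubit as target.
CNOT = np.array([[1, 0, 0, 0],
                 [0, 1, 0, 0],
                 [0, 0, 0, 1],
                 [0, 0, 1, 0]], dtype=complex)


def teleport_t(psi, outcome, correct=True):
    """Data-qubit state after measuring the magic qubit with the given outcome."""
    joint = CNOT @ np.kron(psi, magic)
    data = joint.reshape(2, 2)[:, outcome]       # rows: data bit, columns: magic bit
    data = data / np.linalg.norm(data)
    return S @ data if (outcome == 1 and correct) else data


def same_up_to_phase(a, b):
    return np.isclose(abs(np.vdot(a, b)), 1.0)


rng = np.random.default_rng(0)
psi = rng.normal(size=2) + 1j * rng.normal(size=2)
psi /= np.linalg.norm(psi)
for outcome in (0, 1):
    print(outcome, same_up_to_phase(teleport_t(psi, outcome), T @ psi))
```

Both outcomes occur with probability $1/2$; without the $S$ correction, outcome 1 leaves $T^\dagger|\psi\rangle$ on the data qubit.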

The magic state is $|A\rangle = T|+\rangle = \frac{1}{\sqrt{2}}\left(|0\rangle + e^{i\pi/4}|1\rangle\right)$.

- Show that $|A\rangle$ is not a stabilizer state (i.e., it is not an eigenstate of any single-qubit Pauli operator $\pm X$, $\pm Y$, $\pm Z$).
- Compute $\langle X\rangle$, $\langle Y\rangle$, and $\langle Z\rangle$. Where does $|A\rangle$ sit on the Bloch sphere?
- Why is it significant that the $T$ gate cannot be implemented transversally? What does the Eastin-Knill theorem say?

Solution:

- A single-qubit stabilizer state must lie on an axis of the Bloch sphere ($\pm X$, $\pm Y$, or $\pm Z$). $|A\rangle$ has a phase of $e^{i\pi/4}$ on the $|1\rangle$ component, placing it at a $45^\circ$ angle between the $X$ and $Y$ axes on the equator. It is not an eigenstate of $X$, $Y$, or $Z$, so it is not a stabilizer state.
- $\langle X\rangle = \cos(\pi/4) = 1/\sqrt{2}$. Similarly, $\langle Y\rangle = \sin(\pi/4) = 1/\sqrt{2}$ and $\langle Z\rangle = 0$. The state sits on the equator of the Bloch sphere at angle $\pi/4$ between the $X$ and $Y$ axes.
- The Eastin-Knill theorem states that no quantum error-correcting code can implement a universal gate set entirely through transversal gates. Since Clifford gates alone are not universal (circuits made of them can be efficiently simulated classically, by the Gottesman-Knill theorem), we need at least one non-Clifford gate ($T$) for universality. The $T$ gate must therefore be implemented via non-transversal methods like magic state injection and distillation.
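The expectation values in the second answer can be confirmed numerically with a short NumPy sketch.

```python
import numpy as np

X = np.array([[0, 1], [1, 0]], dtype=complex)
Y = np.array([[0, -1j], [1j, 0]])
Z = np.array([[1, 0], [0, -1]], dtype=complex)

A = np.array([1, np.exp(1j * np.pi / 4)]) / np.sqrt(2)   # |A> = T|+>

# Bloch vector components <P> = <A|P|A>, real because P is Hermitian.
bloch = [np.vdot(A, P @ A).real for P in (X, Y, Z)]
print(bloch)   # approximately (1/sqrt(2), 1/sqrt(2), 0)

# An eigenstate of +-X, +-Y or +-Z would have some |<P>| equal to 1.
is_stabilizer_state = any(np.isclose(abs(v), 1.0) for v in bloch)
print(is_stabilizer_state)
```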

A distance-

- How many physical qubits are needed for

? - If we need a logical error rate of

(required for useful quantum computation), and the physical error rate is with threshold , estimate the required distance using . - How many physical qubits does this require for a single logical qubit? For 100 logical qubits?

- Physical qubits

: qubits : qubits : qubits : qubits : qubits

- We need

.

, so , giving .

We need(rounding to the nearest odd number). - For

: physical qubits per logical qubit.

For 100 logical qubits:physical qubits.

This does not include the overhead for magic state distillation factories, which can substantially increase the total.
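The same kind of estimate can be scripted. In this sketch, the heuristic $p_L \approx A\,(p/p_{th})^{(d+1)/2}$ with $A = 1$, the qubit count $2d^2 - 1$ (rotated layout: $d^2$ data qubits plus one ancilla per stabilizer, $d^2 - 1$ of them), and the input values in the usage lines are assumptions for illustration, not the exercise's numbers. The function names are hypothetical.

```python
def required_distance(p, p_th, target, prefactor=1.0, d_max=201):
    """Smallest odd d >= 3 with prefactor * (p/p_th)^((d+1)/2) <= target."""
    if p >= p_th:
        raise ValueError("below-threshold operation (p < p_th) is required")
    for d in range(3, d_max + 1, 2):
        # Small relative tolerance absorbs floating-point rounding at the boundary.
        if prefactor * (p / p_th) ** ((d + 1) / 2) <= target * (1 + 1e-9):
            return d
    raise ValueError("no distance up to d_max reaches the target")


def physical_qubits(d):
    """Rotated surface code: d^2 data qubits + (d^2 - 1) ancilla qubits (assumed layout)."""
    return 2 * d * d - 1


d = required_distance(p=1e-3, p_th=1e-2, target=1e-12)   # hypothetical inputs
print(d, physical_qubits(d), 100 * physical_qubits(d))    # 23 1057 105700
```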

Experimental Implementations of QEC

We end these lecture notes with some "preview material", covered in detail during next week's lecture, that connects the theoretical framework above to the state of experimental QEC.

It is based on the review 9 and the associated database accessible at github.com/francois-marie/awesome-quantum-computing-experiments.

Platforms and Codes

Experimental efforts have explored a variety of QEC codes across multiple hardware platforms.

The platforms considered include:

- Superconducting Circuits (Transmons, Fluxoniums) 10

- Trapped Ions 11

- Neutral Atoms (in optical tweezers) 12

- Photonic Systems (linear optics, integrated photonics)

- NV Centers in Diamond 13

- Nuclear Magnetic Resonance (NMR, for historical reasons)

The codes implemented experimentally range from simple repetition codes to topological codes:

- Repetition Codes (for bit-flip or phase-flip errors) 14, ?, ?

- Four-qubit codes (such as $[[4,2,2]]$) 15, ?

- Five-qubit Perfect Code ($[[5,1,3]]$) 16

- Steane $[[7,1,3]]$ Code

- Surface Codes (various distances) 17, ?, ?

- Color Codes 18, ?

- Bacon-Shor Codes 19

Timeline

The field has progressed through several milestones:

- First QEC demonstrations (1998-2011): Early NMR and trapped ion experiments demonstrated basic 3-qubit codes 14, ?, ?.

- Scaling to larger codes (2014-2021): Superconducting circuits began demonstrating repeated error correction and exponential error suppression with code distance 20, ?.

- Below-threshold operation (2022-2024): Google demonstrated that increasing the code distance from $d = 3$ to $d = 5$ to $d = 7$ reduces logical error rates, crossing the break-even point 17, ?.

- Logical processors (2024-present): Neutral atom platforms demonstrated 48 logical qubits with color codes 21, and superconducting platforms demonstrated logical computation 22.

Multiple platforms have now demonstrated below-threshold operation for the repetition code and small surface codes.

The next step is below-threshold operation for the full surface code at increasing distances, and performing useful logical computations with encoded qubits.

This requires improvements in qubit quality, qubit count, and real-time decoding speed.

We will discuss these experimental results in depth during next week's lecture.

Conclusion

We started this lecture by seeing that classical error correction protects information through redundancy and majority voting.

The Hamming code does this efficiently, with overhead growing only logarithmically.

In the quantum setting, two constraints change everything: the No-Cloning Theorem forbids copying unknown states, and measurement back-action means we cannot inspect qubits without disturbing them.

These constraints force us to use parity measurements and entanglement instead.

The 3-qubit repetition codes showed the central trick: measure parities rather than individual qubits, just as for the Hamming code, and you can extract error information without collapsing the logical state.

The Shor code extended this to protect against all single-qubit errors simultaneously, and showed that continuous errors are effectively digitized by syndrome measurement.

The stabilizer formalism gave us a compact language to describe codes: instead of tracking exponentially many amplitudes, we track a linear number of operators.

This led to the surface code, where all stabilizers are local.

We discussed the threshold theorem (error correction works as long as physical error rates are below a critical value) and fault-tolerant circuit design (careful gate ordering prevents single faults from cascading into logical errors).

We also saw how logical gates are implemented, with Clifford gates being straightforward and the $T$ gate requiring magic state injection and distillation.

In essence, without quantum error correction, the algorithms from our previous lectures (Shor, Grover, QPE) remain theoretical.

QEC is what bridges the gap and connects the noisy physical qubits from our platform lectures to the logical qubits that algorithms need.

Next week, we look at how different platforms implement these codes in practice.

References

- 1.

- Acharya, R; Abanin, D; Aghababaie-Beni, L; Aleiner, I; Andersen, T; Ansmann, M; Arute, F; Arya, K; Asfaw, A; Astrakhantsev, N; Atalaya, J; Babbush, R; Bacon, D; Ballard, B; Bardin, J; Bausch, J; Bengtsson, A; Bilmes, A; Blackwell, S; Boixo, S; Bortoli, G; Bourassa, A; Bovaird, J; Brill, L; Broughton, M; Browne, D; Buchea, B; Buckley, B; Buell, D; Burger, T; Burkett, B; Bushnell, N; Cabrera, A; Campero, J; Chang, H; Chen, Y; Chen, Z; Chiaro, B; Chik, D; Chou, C; Claes, J; Cleland, A; Cogan, J; Collins, R; Conner, P; Courtney, W; Crook, A; Curtin, B; Das, S; Davies, A; De Lorenzo, L; Debroy, D; Demura, S; Devoret, M; Di Paolo, A; Donohoe, P; Drozdov, I; Dunsworth, A; Earle, C; Edlich, T; Eickbusch, A; Elbag, A; Elzouka, M; Erickson, C; Faoro, L; Farhi, E; Ferreira, V; Burgos, L; Forati, E; Fowler, A; Foxen, B; Ganjam, S; Garcia, G; Gasca, R; Genois, É; Giang, W; Gidney, C; Gilboa, D; Gosula, R; Dau, A; Graumann, D; Greene, A; Gross, J; Habegger, S; Hall, J; Hamilton, M; Hansen, M; Harrigan, M; Harrington, S; Heras, F; Heslin, S; Heu, P; Higgott, O; Hill, G; Hilton, J; Holland, G; Hong, S; Huang, H; Huff, A; Huggins, W; Ioffe, L; Isakov, S; Iveland, J; Jeffrey, E; Jiang, Z; Jones, C; Jordan, S; Joshi, C; Juhas, P; Kafri, D; Kang, H; Karamlou, A; Kechedzhi, K; Kelly, J; Khaire, T; Khattar, T; Khezri, M; Kim, S; Klimov, P; Klots, A; Kobrin, B; Kohli, P; Korotkov, A; Kostritsa, F; Kothari, R; Kozlovskii, B; Kreikebaum, J; Kurilovich, V; Lacroix, N; Landhuis, D; Lange-Dei, T; Langley, B; Laptev, P; Lau, K; Le Guevel, L; Ledford, J; Lee, J; Lee, K; Lensky, Y; Leon, S; Lester, B; Li, W; Li, Y; Lill, A; Liu, W; Livingston, W; Locharla, A; Lucero, E; Lundahl, D; Lunt, A; Madhuk, S; Malone, F; Maloney, A; Mandrà, S; Manyika, J; Martin, L; Martin, O; Martin, S; Maxfield, C; McClean, J; McEwen, M; Meeks, S; Megrant, A; Mi, X; Miao, K; Mieszala, A; Molavi, R; Molina, S; Montazeri, S; Morvan, A; Movassagh, R; Mruczkiewicz, W; Naaman, O; Neeley, M; Neill, C; Nersisyan, A; Neven, 
H; Newman, M; Ng, J; Nguyen, A; Nguyen, M; Ni, C; Niu, M; O’Brien, T; Oliver, W; Opremcak, A; Ottosson, K; Petukhov, A; Pizzuto, A; Platt, J; Potter, R; Pritchard, O; Pryadko, L; Quintana, C; Ramachandran, G; Reagor, M; Redding, J; Rhodes, D; Roberts, G; Rosenberg, E; Rosenfeld, E; Roushan, P; Rubin, N; Saei, N; Sank, D; Sankaragomathi, K; Satzinger, K; Schurkus, H; Schuster, C; Senior, A; Shearn, M; Shorter, A; Shutty, N; Shvarts, V; Singh, S; Sivak, V; Skruzny, J; Small, S; Smelyanskiy, V; Smith, W; Somma, R; Springer, S; Sterling, G; Strain, D; Suchard, J; Szasz, A; Sztein, A; Thor, D; Torres, A; Torunbalci, M; Vaishnav, A; Vargas, J; Vdovichev, S; Vidal, G; Villalonga, B; Heidweiller, C; Waltman, S; Wang, S; Ware, B; Weber, K; Weidel, T; White, T; Wong, K; Woo, B; Xing, C; Yao, Z; Yeh, P; Ying, B; Yoo, J; Yosri, N; Young, G; Zalcman, A; Zhang, Y; Zhu, N; Zobrist, N . Quantum error correction below the surface code threshold. Nature 638 (8052) , 920–926 (2025) . doi:10.1038/s41586-024-08449-y

- 2.

- Chen, Z; Satzinger, K; Atalaya, J; Korotkov, A; Dunsworth, A; Sank, D; Quintana, C; McEwen, M; Barends, R; Klimov, P; Hong, S; Jones, C; Petukhov, A; Kafri, D; Demura, S; Burkett, B; Gidney, C; Fowler, A; Paler, A; Putterman, H; Aleiner, I; Arute, F; Arya, K; Babbush, R; Bardin, J; Bengtsson, A; Bourassa, A; Broughton, M; Buckley, B; Buell, D; Bushnell, N; Chiaro, B; Collins, R; Courtney, W; Derk, A; Eppens, D; Erickson, C; Farhi, E; Foxen, B; Giustina, M; Greene, A; Gross, J; Harrigan, M; Harrington, S; Hilton, J; Ho, A; Huang, T; Huggins, W; Ioffe, L; Isakov, S; Jeffrey, E; Jiang, Z; Kechedzhi, K; Kim, S; Kitaev, A; Kostritsa, F; Landhuis, D; Laptev, P; Lucero, E; Martin, O; McClean, J; McCourt, T; Mi, X; Miao, K; Mohseni, M; Montazeri, S; Mruczkiewicz, W; Mutus, J; Naaman, O; Neeley, M; Neill, C; Newman, M; Niu, M; O’Brien, T; Opremcak, A; Ostby, E; Pató, B; Redd, N; Roushan, P; Rubin, N; Shvarts, V; Strain, D; Szalay, M; Trevithick, M; Villalonga, B; White, T; Yao, Z; Yeh, P; Yoo, J; Zalcman, A; Neven, H; Boixo, S; Smelyanskiy, V; Chen, Y; Megrant, A; Kelly, J . Exponential suppression of bit or phase errors with cyclic error correction. Nature 595 (7867) , 383–387 (2021) . doi:10.1038/s41586-021-03588-y

- 3.

- Shor, P . Scheme for reducing decoherence in quantum computer memory. Physical Review A 52 (4) , R2493–R2496 (1995) . doi:10.1103/PhysRevA.52.R2493

- 4.

- Nielsen, M; Chuang, I . Quantum Computation and Quantum Information 10th Anniversary Edition. (2010) . doi:10.1017/CBO9780511976667

- 5.

- Terhal, B . Quantum Error Correction for Quantum Memories. Reviews of Modern Physics 87 (2) , 307–346 (2015) . doi:10.1103/RevModPhys.87.307

- 6.

- Gottesman, D . Stabilizer Codes and Quantum Error Correction. (1997) . doi:10.7907/rzr7-dt72

- 7.

- Fowler, A; Mariantoni, M; Martinis, J; Cleland, A . Surface codes: Towards practical large-scale quantum computation. (2012) . doi:10.48550/arXiv.1208.0928

- 8.

- Kitaev, A . Fault-tolerant quantum computation by anyons. Annals of Physics 303 (1) , 2–30 (2003) . doi:10.1016/S0003-4916(02)00018-0

- 9.

- Le Régent, F . Awesome Quantum Computing Experiments: Benchmarking Experimental Progress Towards Fault-Tolerant Quantum Computation. (2025) . doi:10.48550/arXiv.2507.03678

- 10.

- Kjaergaard, M; Schwartz, M; Braumüller, J; Krantz, P; Wang, J; Gustavsson, S; Oliver, W . Superconducting Qubits: Current State of Play. Annual Review of Condensed Matter Physics 11 (1) , 369–395 (2020) . doi:10.1146/annurev-conmatphys-031119-050605

- 11.

- Bruzewicz, C; Chiaverini, J; McConnell, R; Sage, J . Trapped-Ion Quantum Computing: Progress and Challenges. Applied Physics Reviews 6 (2) , 021314 (2019) . doi:10.1063/1.5088164

- 12.

- Wintersperger, K; Dommert, F; Ehmer, T; Hoursanov, A; Klepsch, J; Mauerer, W; Reuber, G; Strohm, T; Yin, M; Luber, S . Neutral Atom Quantum Computing Hardware: Performance and End-User Perspective. EPJ Quantum Technology 10 (1) , 32 (2023) . doi:10.1140/epjqt/s40507-023-00190-1

- 13.

- Pezzagna, S; Meijer, J . Quantum computer based on color centers in diamond. Applied Physics Reviews 8 (1) , 011308 (2021) . doi:10.1063/5.0007444

- 14.

- Cory, D; Price, M; Maas, W; Knill, E; Laflamme, R; Zurek, W; Havel, T; Somaroo, S . Experimental Quantum Error Correction. Phys. Rev. Lett. 81 (10) , 2152–2155 (1998) . doi:10.1103/PhysRevLett.81.2152

- 15.

- Linke, N; Gutierrez, M; Landsman, K; Figgatt, C; Debnath, S; Brown, K; Monroe, C . Fault-tolerant quantum error detection. Science Advances 3 (10) , e1701074 (2017) . doi:10.1126/sciadv.1701074

- 16.

- Knill, E; Laflamme, R; Martinez, R; Negrevergne, C . Implementation of the Five Qubit Error Correction Benchmark. Physical Review Letters 86 (25) , 5811–5814 (2001) . doi:10.1103/PhysRevLett.86.5811

- 17.

- Acharya, R; Aleiner, I; Allen, R; Andersen, T; Ansmann, M; Arute, F; Arya, K; Asfaw, A; Atalaya, J; Babbush, R; Bacon, D; Bardin, J; Basso, J; Bengtsson, A; Boixo, S; Bortoli, G; Bourassa, A; Bovaird, J; Brill, L; Broughton, M; Buckley, B; Buell, D; Burger, T; Burkett, B; Bushnell, N; Chen, Y; Chen, Z; Chiaro, B; Cogan, J; Collins, R; Conner, P; Courtney, W; Crook, A; Curtin, B; Debroy, D; Barba, A; Demura, S; Dunsworth, A; Eppens, D; Erickson, C; Faoro, L; Farhi, E; Fatemi, R; Burgos, L; Forati, E; Fowler, A; Foxen, B; Giang, W; Gidney, C; Gilboa, D; Giustina, M; Dau, A; Gross, J; Habegger, S; Hamilton, M; Harrigan, M; Harrington, S; Higgott, O; Hilton, J; Hoffmann, M; Hong, S; Huang, T; Huff, A; Huggins, W; Ioffe, L; Isakov, S; Iveland, J; Jeffrey, E; Jiang, Z; Jones, C; Juhas, P; Kafri, D; Kechedzhi, K; Kelly, J; Khattar, T; Khezri, M; Kieferová, M; Kim, S; Kitaev, A; Klimov, P; Klots, A; Korotkov, A; Kostritsa, F; Kreikebaum, J; Landhuis, D; Laptev, P; Lau, K; Laws, L; Lee, J; Lee, K; Lester, B; Lill, A; Liu, W; Locharla, A; Lucero, E; Malone, F; Marshall, J; Martin, O; McClean, J; McCourt, T; McEwen, M; Megrant, A; Costa, B; Mi, X; Miao, K; Mohseni, M; Montazeri, S; Morvan, A; Mount, E; Mruczkiewicz, W; Naaman, O; Neeley, M; Neill, C; Nersisyan, A; Neven, H; Newman, M; Ng, J; Nguyen, A; Nguyen, M; Niu, M; O'Brien, T; Opremcak, A; Platt, J; Petukhov, A; Potter, R; Pryadko, L; Quintana, C; Roushan, P; Rubin, N; Saei, N; Sank, D; Sankaragomathi, K; Satzinger, K; Schurkus, H; Schuster, C; Shearn, M; Shorter, A; Shvarts, V; Skruzny, J; Smelyanskiy, V; Smith, W; Sterling, G; Strain, D; Szalay, M; Torres, A; Vidal, G; Villalonga, B; Heidweiller, C; White, T; Xing, C; Yao, Z; Yeh, P; Yoo, J; Young, G; Zalcman, A; Zhang, Y; Zhu, N. Suppressing quantum errors by scaling a surface code logical qubit. Nature 614 (7949), 676–681 (2023). doi:10.1038/s41586-022-05434-1

- 18.

- Bluvstein, D; Levine, H; Semeghini, G; Wang, T; Ebadi, S; Kalinowski, M; Keesling, A; Maskara, N; Pichler, H; Greiner, M; Vuletić, V; Lukin, M. A quantum processor based on coherent transport of entangled atom arrays. Nature 604 (7906), 451–456 (2022). doi:10.1038/s41586-022-04592-6

- 19.

- Egan, L; Debroy, D; Noel, C; Risinger, A; Zhu, D; Biswas, D; Newman, M; Li, M; Brown, K; Cetina, M; Monroe, C. Fault-tolerant control of an error-corrected qubit. Nature 598 (7880), 281–286 (2021). doi:10.1038/s41586-021-03928-y

- 20.

- Kelly, J; Barends, R; Fowler, A; Megrant, A; Jeffrey, E; White, T; Sank, D; Mutus, J; Campbell, B; Chen, Y; Chen, Z; Chiaro, B; Dunsworth, A; Hoi, I; Neill, C; O'Malley, P; Quintana, C; Roushan, P; Vainsencher, A; Wenner, J; Cleland, A; Martinis, J. State preservation by repetitive error detection in a superconducting quantum circuit. Nature 519 (7541), 66–69 (2015). doi:10.1038/nature14270

- 21.

- Bluvstein, D; Evered, S; Geim, A; Li, S; Zhou, H; Manovitz, T; Ebadi, S; Cain, M; Kalinowski, M; Hangleiter, D; Bonilla Ataides, J; Maskara, N; Cong, I; Gao, X; Sales Rodriguez, P; Karolyshyn, T; Semeghini, G; Gullans, M; Greiner, M; Vuletić, V; Lukin, M. Logical quantum processor based on reconfigurable atom arrays. Nature 626 (7997), 58–65 (2024). doi:10.1038/s41586-023-06927-3

- 22.

- Reichardt, B; Paetznick, A; Aasen, D; Basov, I; Bello-Rivas, J; Bonderson, P; Chao, R; Dam, W; Hastings, M; Paz, A; Silva, M; Sundaram, A; Svore, K; Vaschillo, A; Wang, Z; Zanner, M; Cairncross, W; Chen, C; Crow, D; Kim, H; Kindem, J; King, J; McDonald, M; Norcia, M; Ryou, A; Stone, M; Wadleigh, L; Barnes, K; Battaglino, P; Bohdanowicz, T; Booth, G; Brown, A; Brown, M; Cassella, K; Coxe, R; Epstein, J; Feldkamp, M; Griger, C; Halperin, E; Heinz, A; Hummel, F; Jaffe, M; Jones, A; Kapit, E; Kotru, K; Lauigan, J; Li, M; Marjanovic, J; Megidish, E; Meredith, M; Morshead, R; Muniz, J; Narayanaswami, S; Nishiguchi, C; Paule, T; Pawlak, K; Pudenz, K; Pérez, D; Simon, J; Smull, A; Stack, D; Urbanek, M; Veerdonk, R; Vendeiro, Z; Weverka, R; Wilkason, T; Wu, T; Xie, X; Zalys-Geller, E; Zhang, X; Bloom, B. Logical computation demonstrated with a neutral atom quantum processor. (2024). doi:10.48550/arXiv.2411.11822